You have a dataset, and you want to build an LLM to do interesting things with it.

But without a good dataset of expected behavior to test your LLM's answers against, it's hard to know whether any of your LLM experiments are useful!

In this tutorial, we’ll learn how to generate an evaluation set (a list of questions, answers and contexts from your own corpus of data) – so you can get straight to running some LLM experiments.

The Dataset: Space Colonization

For this tutorial, I created a dataset of research papers and articles on Space Colonization. It will help us answer questions like – can humans live in space? Can permanent communities be built and inhabited off the Earth? What are the best candidates for the first space colonies? How should we design the colonization process?

You can use the same pipeline for any dataset.

We’ll first use ChatGPT to generate our evaluation dataset quickly.

Then we’ll improve our evaluation dataset with an LLM framework and generate questions mirroring actual production data.

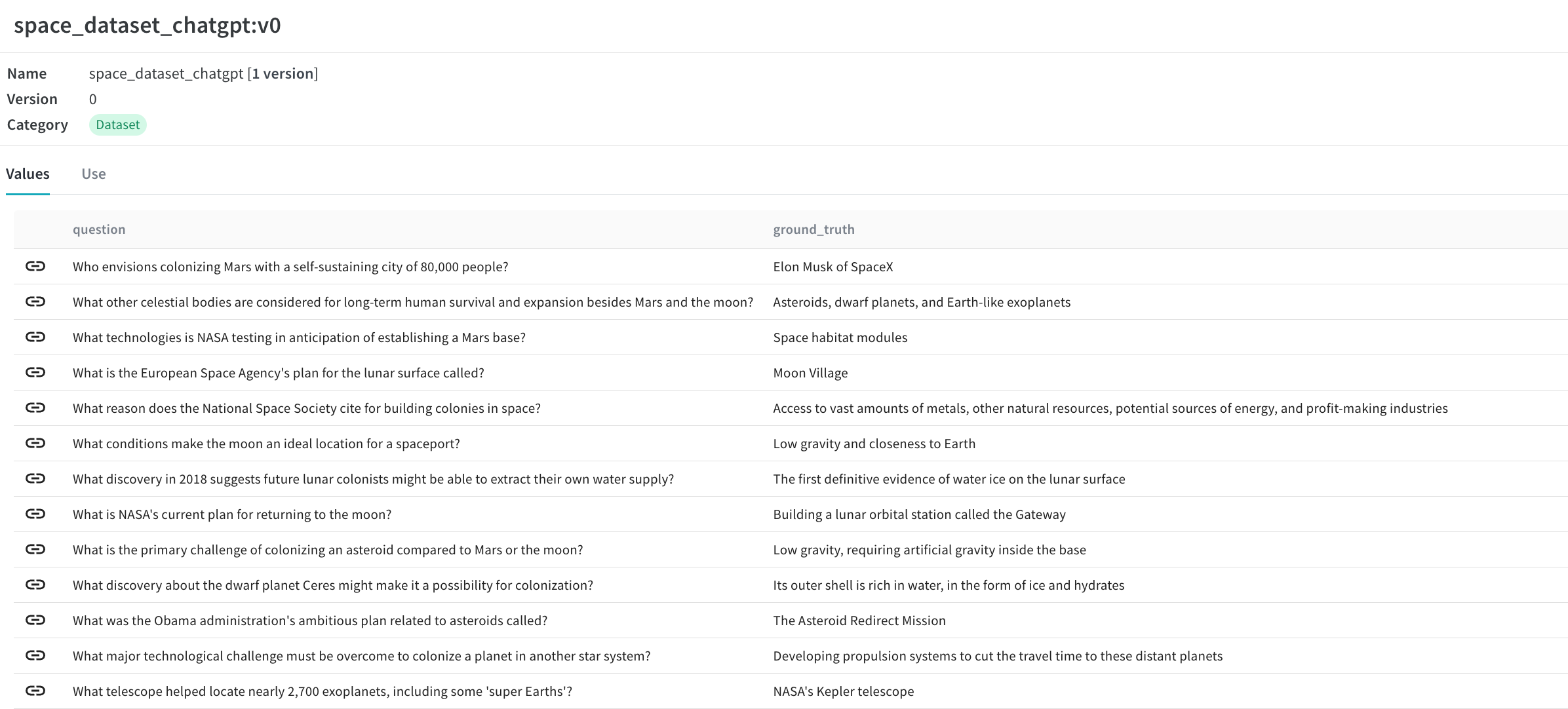

Using ChatGPT To Make An Evaluation Set

To get a lot of diverse questions quickly, you could show your corpus to ChatGPT and ask it to generate some questions. This is much faster than writing them by hand, and it can work really well, especially if your questions follow a specific format.
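If you'd rather script this step than paste text into the ChatGPT UI, the core is a prompt plus a parser for the reply. Below is a minimal sketch. The prompt wording and the helper names `build_question_prompt` and `parse_numbered_questions` are hypothetical, and the actual model call is left out: a hand-written reply stands in for it.

```python
import re

def build_question_prompt(chunk: str, n_questions: int = 5) -> str:
    """Build a prompt asking a chat model for questions answerable from `chunk`."""
    return (
        f"Read the passage below and write {n_questions} diverse questions "
        "that can be answered using only this passage. "
        "Return them as a numbered list, one per line.\n\n"
        f"Passage:\n{chunk}"
    )

def parse_numbered_questions(reply: str) -> list[str]:
    """Extract questions from reply lines formatted like '1. ...' or '2) ...'."""
    questions = []
    for line in reply.splitlines():
        match = re.match(r"^\s*\d+[.)]\s+(.*\S)\s*$", line)
        if match:
            questions.append(match.group(1))
    return questions

# A hand-written reply standing in for a real model response (hypothetical).
prompt = build_question_prompt("Mars has a thin CO2 atmosphere...", n_questions=2)
fake_reply = "1. What is Mars' atmosphere mostly made of?\n2) Why is it thin?"
print(parse_numbered_questions(fake_reply))
```

Asking for a fixed list format keeps the parsing trivial; lines that don't match the pattern are simply ignored.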

✨ Here is a set of questions I generated using ChatGPT.

You can see the questions ChatGPT generated are not the most evocative. They're quite simple, but still a big improvement over writing them manually!

However, if your corpus is big (e.g. years of research data), it won't fit in ChatGPT's context window (at least as of July 2024).
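One workaround, before reaching for a framework, is to split the corpus into chunks that each fit in the context window and generate questions chunk by chunk. A minimal sketch, assuming the corpus arrives as a list of paragraphs and using word count as a rough stand-in for tokens (a real pipeline would count tokens with the model's tokenizer); `chunk_by_budget` is a hypothetical helper:

```python
def chunk_by_budget(paragraphs: list[str], max_words: int) -> list[str]:
    """Greedily pack paragraphs into chunks of at most `max_words` words."""
    chunks: list[str] = []
    current: list[str] = []
    current_words = 0
    for para in paragraphs:
        words = para.split()
        if not words:
            continue  # skip blank paragraphs
        # Split any paragraph longer than the budget on word boundaries.
        pieces = [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]
        for piece in pieces:
            n = len(piece.split())
            # Start a new chunk if this piece would push us over the budget.
            if current and current_words + n > max_words:
                chunks.append("\n\n".join(current))
                current, current_words = [], 0
            current.append(piece)
            current_words += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks

paras = ["one two three", "four five", "six seven eight nine ten eleven"]
chunks = chunk_by_budget(paras, max_words=5)
print(chunks)
```

Chunking alone doesn't solve the next problem, though: each chunk is seen in isolation, so questions that need several sections are never generated.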

The other constraint is that our questions need to be comprehensive: they should span most of the documents and vary in difficulty. To get there, we can use an LLM framework.
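To make "span most of the documents" and "vary in difficulty" concrete, here is a hypothetical sketch (not how RAGAS does it) that picks chunks evenly spaced from the start to the end of the corpus and cycles through difficulty labels:

```python
def spread_sample(chunks: list[str], k: int) -> list[int]:
    """Pick k chunk indices spread evenly across the whole corpus."""
    n = len(chunks)
    if k <= 0 or n == 0:
        return []
    if k >= n:
        return list(range(n))
    # Take the middle of each of k equal-width slices of the corpus.
    return [int((i + 0.5) * n / k) for i in range(k)]

def assign_difficulty(indices: list[int], levels=("easy", "medium", "hard")):
    """Cycle through difficulty labels so each level gets a similar share."""
    return [(idx, levels[j % len(levels)]) for j, idx in enumerate(indices)]

picked = spread_sample([f"chunk {i}" for i in range(10)], k=3)
print(assign_difficulty(picked))
```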

✨ You can use W&B Weave to log, view and compare your datasets as you try various techniques.

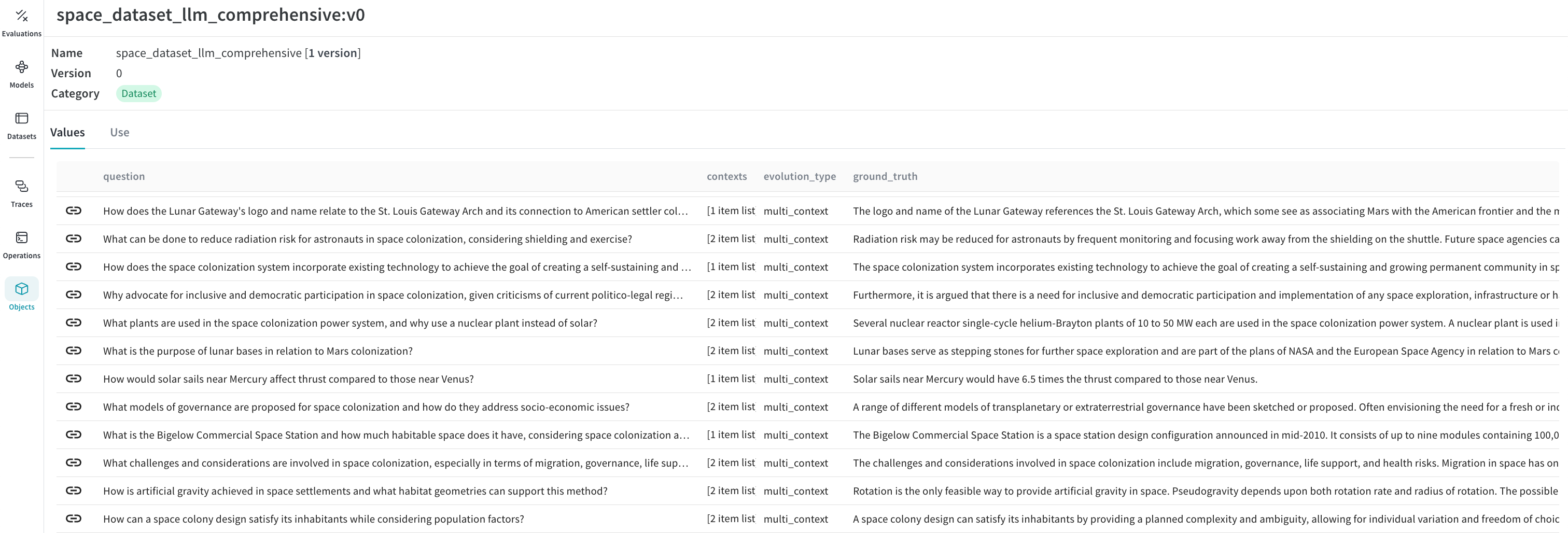

Use An LLM Framework To Craft More Realistic Questions

Frameworks like RAGAS let you systematically generate complex questions. They also let you tweak what types of questions you want to optimize for. For example, you can create questions that test if the LLM can do reasoning, or questions where the LLM needs information from multiple sections to answer the question.

This makes the questions more realistic.

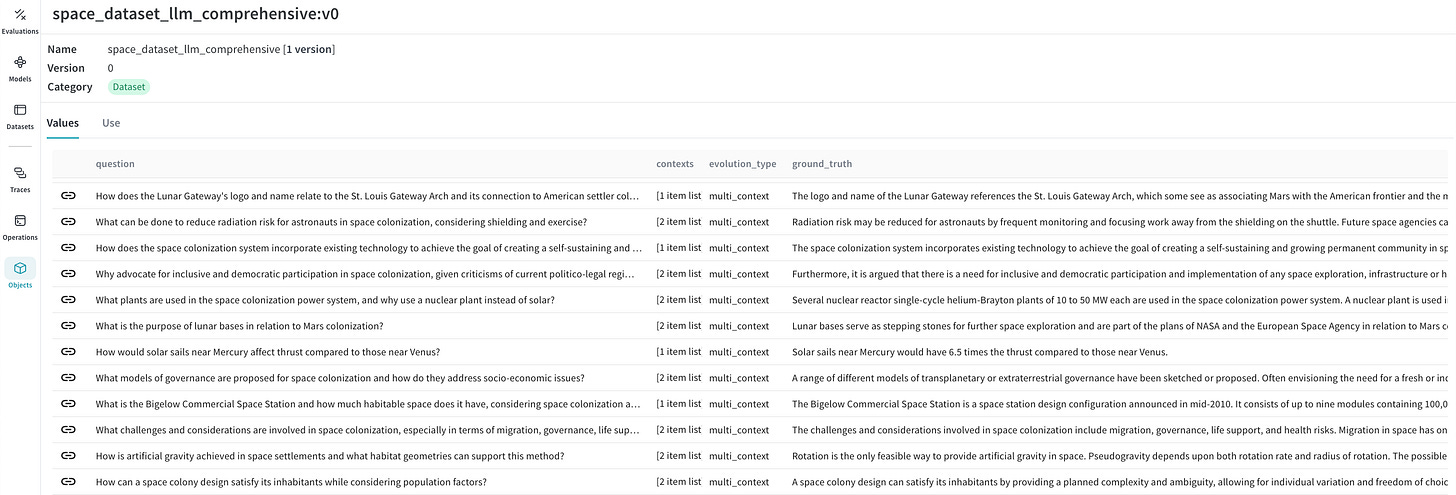

Below are the questions I generated for my SpaceRAG application. You can see right away that these questions are a lot more complex and more representative of what people trying to learn about space colonization might ask. Also note that for multi_context questions, the model usually fetches more than one piece of relevant context.
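If you'd like to check that observation with numbers rather than by eyeballing rows, a small tally works. This sketch assumes each generated row is a dict with `evolution_type` and `contexts` fields; the toy rows below are made up for illustration and are not from my dataset. With the real data you could pass `space_dataset_df.to_dict(orient="records")`.

```python
from collections import defaultdict
from statistics import mean

def contexts_per_type(rows: list[dict]) -> dict:
    """Average number of contexts attached to each question type."""
    counts = defaultdict(list)
    for row in rows:
        counts[row["evolution_type"]].append(len(row["contexts"]))
    return {qtype: mean(ns) for qtype, ns in counts.items()}

# Toy rows (hypothetical), shaped like a generated test set.
rows = [
    {"question": "...", "evolution_type": "simple", "contexts": ["c1"]},
    {"question": "...", "evolution_type": "multi_context", "contexts": ["c1", "c2"]},
    {"question": "...", "evolution_type": "multi_context", "contexts": ["c3", "c4", "c5"]},
]
print(contexts_per_type(rows))
```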

Build Your Own Evaluation Dataset

Let’s walk through how to do this in code.

We first load our dataset (you can have multiple files in a variety of formats, and the folks over at LlamaIndex will slurp them up for you). Here I have a bunch of text and PDF files of papers and other research articles about Space Colonization in my data folder.

```python
import weave
from llama_index.core import SimpleDirectoryReader

# Initialize Weave
weave.init('spacedata')

# Load your documents
reader = SimpleDirectoryReader("./data")
documents = reader.load_data()
```

Next up, we use GPT-3.5 to generate our questions and GPT-4 to critique them, so the generations can be iterated on.
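RAGAS handles the generator/critic interplay internally, and the toy below is not its implementation. It's a sketch of the general pattern: one model drafts a question, another scores it and gives feedback, and the loop retries until a draft passes. `generate_with_critic`, the threshold, and the stub functions standing in for the GPT-3.5 and GPT-4 calls are all hypothetical.

```python
from typing import Callable, Optional

def generate_with_critic(
    generate: Callable[[str, Optional[str]], str],
    critique: Callable[[str], tuple[float, str]],
    context: str,
    threshold: float = 0.7,
    max_rounds: int = 3,
) -> Optional[str]:
    """Draft a question, let the critic score it, and retry with its feedback."""
    feedback = None
    for _ in range(max_rounds):
        question = generate(context, feedback)
        score, feedback = critique(question)
        if score >= threshold:
            return question
    return None  # no draft passed within max_rounds

# Stubs standing in for the generator and critic models (hypothetical).
drafts = iter(["What is space?", "Which Mars resources could support a permanent colony?"])

def toy_generate(context: str, feedback: Optional[str]) -> str:
    return next(drafts)

def toy_critique(question: str) -> tuple[float, str]:
    # Hypothetical rule: reward questions tied to the Mars passage.
    return (0.9, "ok") if "Mars" in question else (0.2, "Be more specific.")

result = generate_with_critic(toy_generate, toy_critique, context="Mars passage...")
print(result)
```

Using a stronger model as the critic is the design choice here: a cheaper model does the bulk of the drafting, and the more capable one only judges.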

```python
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
from ragas.testset.generator import TestsetGenerator

# Generator and critic with OpenAI models
generator_llm = ChatOpenAI(model="gpt-3.5-turbo-16k")
critic_llm = ChatOpenAI(model="gpt-4")
embeddings = OpenAIEmbeddings()

generator = TestsetGenerator.from_langchain(generator_llm, critic_llm, embeddings)
```

What's great about RAGAS is that it lets you define how much weight to give complex reasoning, answers spanning multiple documents, conversational back-and-forth, etc. in the questions you generate.

I love how simple they make it!
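The weights are shares of the total test size. RAGAS does its own allocation internally and I haven't checked exactly how it rounds; the hypothetical `allocate` helper below just makes the arithmetic visible: with 200 questions, 0.3 / 0.4 / 0.3 works out to 60 / 80 / 60.

```python
import math

def allocate(test_size: int, distributions: dict[str, float]) -> dict[str, int]:
    """Split test_size across question types in proportion to their weights."""
    total = sum(distributions.values())
    raw = {name: test_size * w / total for name, w in distributions.items()}
    counts = {name: math.floor(v) for name, v in raw.items()}
    leftover = test_size - sum(counts.values())
    # Hand the remaining questions to the largest fractional parts first.
    by_remainder = sorted(raw, key=lambda name: raw[name] - counts[name], reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    return counts

print(allocate(200, {"simple": 0.3, "multi_context": 0.4, "reasoning": 0.3}))
```

Largest-remainder rounding keeps the counts summing to the requested size even when the weights don't divide it evenly (e.g. 7 questions split 2 / 3 / 2).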

```python
from ragas.testset.evolutions import simple, reasoning, multi_context

# Share of each question type in the generated test set
distributions = {simple: 0.3, multi_context: 0.4, reasoning: 0.3}

# Use generator.generate_with_llamaindex_docs when LlamaIndex loaded the documents
space_dataset = generator.generate_with_llamaindex_docs(documents, test_size=200, distributions=distributions)
space_dataset_df = space_dataset.to_pandas()
```

Next we log our dataset to Weights & Biases Weave.

```python
from weave import Dataset

# Create a dataset; rows are passed as a list of dicts, one per example
dataset = Dataset(name='space_dataset_llm_comprehensive', rows=space_dataset_df.to_dict(orient="records"))

# Publish the dataset
weave.publish(dataset)

# Retrieve the dataset
dataset_ref = weave.ref('space_dataset_llm_comprehensive').get()

# Access a specific example
# question = dataset_ref.rows[2]['question']
```

✨ And now we can go to our Weave dashboard and check out the dataset!

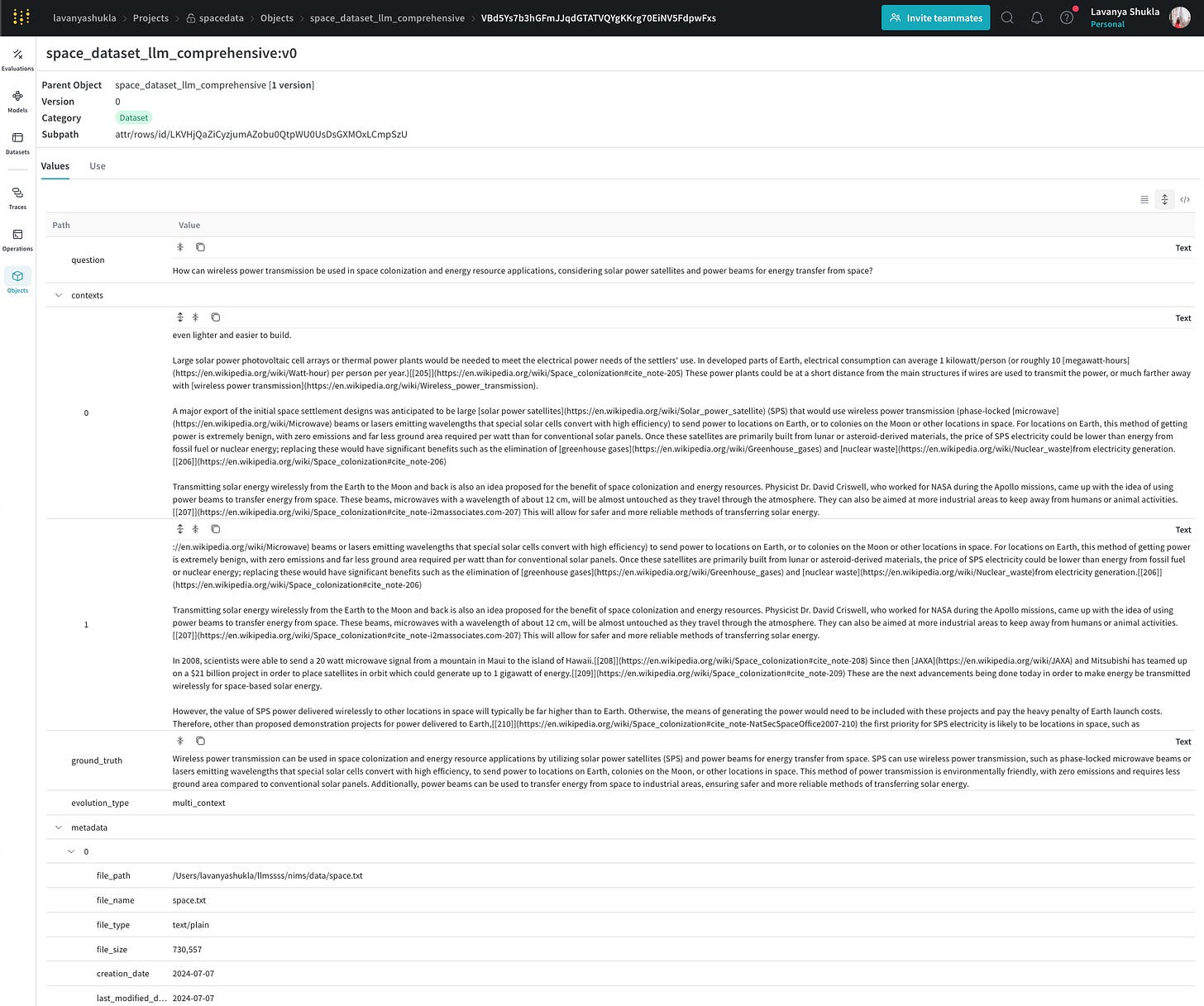

You can click into each row in Weave and see which context the model generated each question from. Try it yourself here.

And that’s all for this first tutorial.

You can now take any corpus of data and generate a good evaluation dataset from it! And you can use these datasets to evaluate both your retrievers and your generators for your RAG pipelines.
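For the retriever side, one simple check is hit rate: for each generated question, does the retriever return at least one of the contexts the question was generated from? Below is a minimal sketch assuming rows with `question` and `contexts` fields. The keyword retriever and the three-chunk corpus are toy stand-ins (hypothetical) for your real retriever and data.

```python
import re

def tokens(text: str) -> set[str]:
    """Lowercase alphanumeric words in `text`."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def keyword_retriever(corpus: list[str], query: str, k: int) -> list[str]:
    """Toy retriever: rank chunks by how many words they share with the query."""
    q = tokens(query)
    ranked = sorted(corpus, key=lambda doc: len(q & tokens(doc)), reverse=True)
    return ranked[:k]

def hit_rate(rows: list[dict], retrieve, k: int) -> float:
    """Share of questions whose top-k results include at least one gold context."""
    if not rows:
        return 0.0
    hits = 0
    for row in rows:
        retrieved = retrieve(row["question"], k)
        if any(ctx in retrieved for ctx in row["contexts"]):
            hits += 1
    return hits / len(rows)

corpus = [
    "Mars has water ice at its poles.",
    "The Moon has low gravity and no atmosphere.",
    "Space habitats need radiation shielding.",
]
rows = [
    {"question": "Where is water ice found on Mars?", "contexts": [corpus[0]]},
    {"question": "Why do space habitats need shielding?", "contexts": [corpus[2]]},
    {"question": "Is there an atmosphere to block radiation?", "contexts": [corpus[2]]},
]

def retrieve(query: str, k: int) -> list[str]:
    return keyword_retriever(corpus, query, k)

print(hit_rate(rows, retrieve, k=1), hit_rate(rows, retrieve, k=2))
```

Exact string matching assumes your retriever returns the same chunk text that ended up in `contexts`; if your chunking differs from the generator's, you'd need a fuzzier overlap check.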

✨ You can use W&B Weave to log, view and compare your datasets as you try various techniques, and explore the dashboards we built in this blog post here.

You can try out the tools used here: W&B Weave, RAGAS and LlamaIndex.

If you have any questions, comments or learnings from your own experiments with LLMs I’d love to hear from you in the comments!