Imagine an AI that doesn’t just guess at an answer, but thinks its way through every step—like the slightly chaotic inner monologue in our heads as we think through a problem.

That’s R1, an open-source language model from DeepSeek that merges extreme scale (671B parameters) with selective activation (~37B at a time). In doing so, it delivers step-by-step logical reasoning at a fraction of the usual cost for models of its size. DeepSeek further optimizes R1 by adopting mixed-precision floating points, ensuring efficient memory use and faster GPU throughput at such a large scale. Trained with reinforcement learning focused on step-by-step “chain-of-thought” logic, R1 doesn’t just guess—it thinks through each solution like a meticulous problem-solver. And because it’s fully open-source, anyone can inspect, modify, or run it locally, making R1 a game-changer in advanced reasoning, high efficiency, and complete transparency—ideal for researchers, developers, educators, and curious hobbyists alike.

In this post, we’ll explore:

The Innovation Behind R1

Is R1 Actually Cheaper?

Does R1 Make Inference Faster?

R1-0 vs. R1

How R1 Compares to Other Models

When You Should Use R1

How People Are Already Using R1

Technical Deep Dive

1. The Innovation Behind R1

A Focus on Reasoning

Most LLMs generate answers by pattern-matching—they’ve seen so many examples of text that they know which words are likely to follow. R1 attempts something bolder: it reasons. It adds another layer: a chain-of-thought approach. Internally, it breaks questions down into intermediate steps and carefully checks each move, much like a person solving a puzzle. This yields more coherent, logical outcomes in math, coding, and multi-step reasoning tasks.

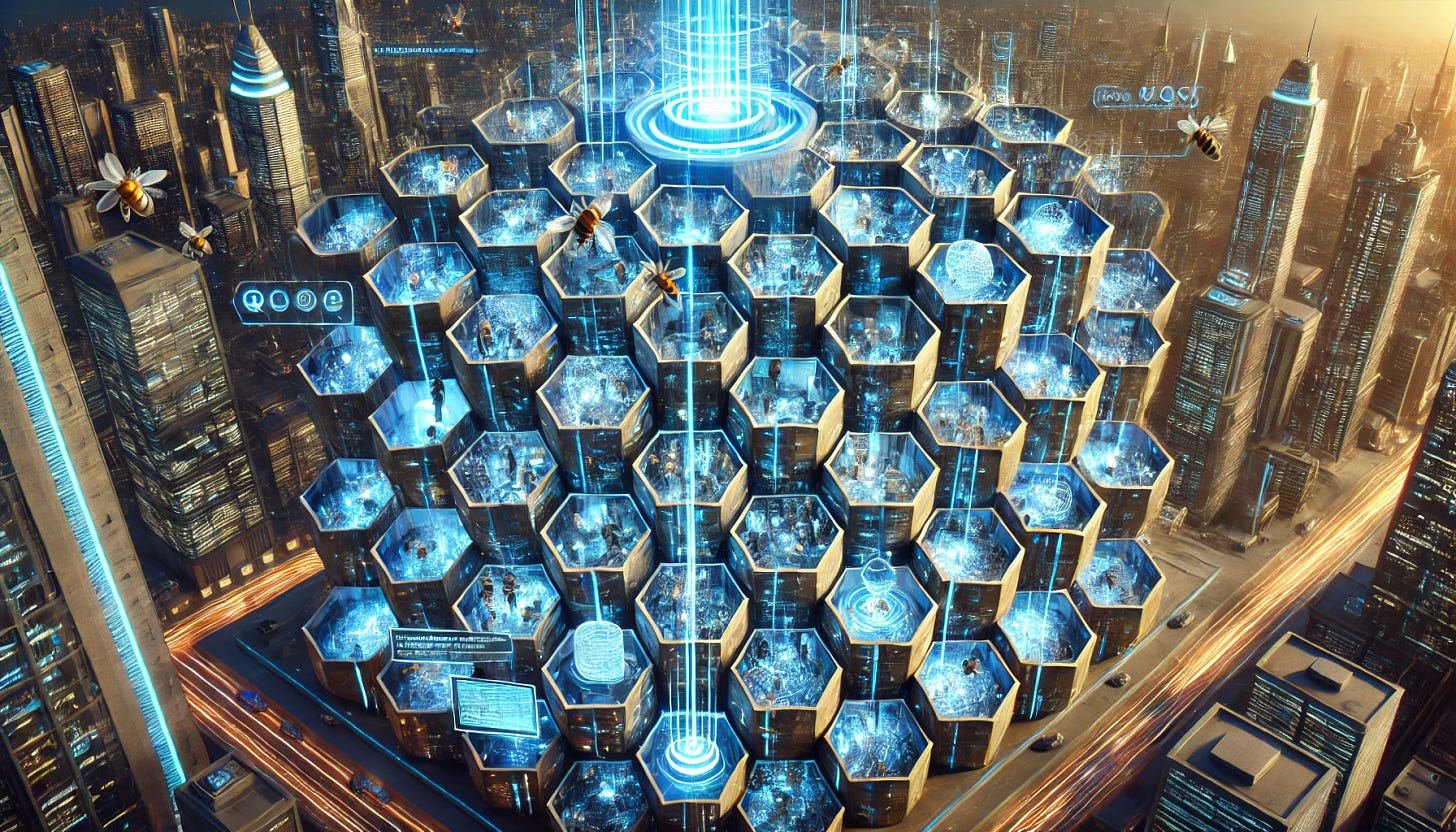

Mixture-of-Experts Architecture – A significant leap in efficiency

One of R1’s standout features is its Mixture-of-Experts (MoE) design. Instead of a single, dense set of 671B parameters, R1 is split into many “expert” subnetworks. Think of it as having many specialized “mini-models” under one roof. Only the relevant specialists come online for each part of the text. This lowers the compute load while giving the model a huge “brain” to draw on. When you ask R1 a question, it activates only the experts that are most relevant, effectively using ~37B parameters to process each token.

This “sparse” approach delivers:

High capacity: 671B total parameters let the model hold broad knowledge.

Lower compute cost: Only ~37B are used per token, cutting expenses drastically.

This ability to behave like a 37B model at inference, while having the knowledge of a 671B model is incredible! It can tap into specialized subnetworks, without incurring the memory and compute overhead, which is a significant leap forward in efficiency!

R1 is a tangible proof that Mixture-of-Experts (MoE) can work reliably at extreme scales when trained carefully. We’ve seen earlier MoE models like Google’s Switch Transformer demonstrate potential but often run into training stability issues. R1’s methodical balancing of experts and gating strategies is a great achievement, breathing new life into large-scale MoE research.

Having specialized experts in an MoE architecture also offers new ways to implement safety, by turning off or restricting certain experts they think are risky, system operators could enforce dynamic policy constraints in real time—an intriguing possibility for future large-scale AI governance.

Reinforcement Learning for Chain-of-Thought – A significant leap in reasoning

DeepSeek fine-tuned R1 using reinforcement learning (RL), rewarding the model for correct intermediate reasoning steps, not just final answers. This forced R1 to methodically test and refine its internal chain-of-thought. The result is a model that learns how to reason rather than simply memorizing text patterns.

The model is still fundamentally a sequence of learned probabilities, the middle step of “thinking out loud” greatly enhances R1’s problem-solving abilities, especially in math and code. This approach sets a precedent for how future models might be trained and evaluated on reasoning rather than just final answers.

Floating Point Precision Optimizations – A significant leap in efficiency

R1 pushes mixed-precision operations further than any large-scale models by assigning “precision tiers” to each component of the network. Rather than simply converting entire model layers to half precision, DeepSeek employs a nuanced, “tiered precision” strategy.

Most feed-forward math runs in half precision (FP16) or bfloat16 (BF16), drastically cutting memory use and boosting throughput. Also, gating mechanisms and high-variance transformations remain in full precision (FP32) to preserve numerical stability. Dynamic loss scaling and careful per-expert precision settings prevent gradient explosions or underflows. Due to this selective approach, developers can train and deploy R1’s 671B parameters with less hardware strain—without compromising accuracy.

These these carefully orchestrated precision tiers significantly cut GPU memory usage and speed up both training and inference without a noticeable drop in accuracy. This refined approach to mixed precision is especially valuable for multi-GPU setups, enabling more parallelism and larger batch sizes without blowing past VRAM limits.

These practices show that R1’s approach to mixed precision isn’t just an afterthought—it’s a core design pillar, enabling the model’s massive parameter count to remain cost-effective and accessible to a broader range of researchers and practitioners.

Open-Source and Community-Driven

Unlike proprietary models behind APIs, R1 is fully open. Anyone can download R1, run it locally or on their own server, inspect its code and architecture and modify it. This openness means the community has quickly jumped in to experiment and build on R1 – from running it in local chat apps to analyzing its capabilities on forums and blogs.

It’s a big step toward democratizing access to advanced AI. Researchers, startups, and even hobbyists can use R1 locally, without a paywall.

2. Is R1 Actually Cheaper?

Yes – remarkably so! Here’s why:

Sparse Activation = Lower Inference Costs

A large model typically means high inference costs: you must load and compute all parameters for every token. R1 breaks that rule, because it only uses ~37B parameters per token.

Less Server Time, Lower Electricity: Only the active experts work on each token.

Mid-Sized Model Compute: You get the capacity of 671B behemoth but effectively pay for ~37B each time.

Early adopters find R1 can be 50–85% cheaper per token: than models like GPT-4 or Claude. According to DeepSeek, running R1 was estimated at around $8 per 1 million tokens (inputs + outputs combined). In contrast, OpenAI’s top reasoning model, O1, costs about $15 per 1M input tokens and $60 per 1M output tokens.

Community-hosted R1 instances: sometimes offer near-free usage, further cutting costs.

Training Efficiencies

DeepSeek reportedly trained R1 on 14.8 trillion tokens—yet they contained costs by:

Using in-house hardware with optimized routing strategies.

Combining large pre-training with reinforcement learning, reducing the need for massive labeled datasets.

Carefully balancing experts so that all sub-networks get enough data to learn effectively (preventing “starved” experts).

Open Competition

Because R1 is open-source, multiple hosting providers can serve it. Unlike proprietary models (e.g., GPT-4), R1 isn’t locked behind one company’s pricing. Competition among hosts helps drive down usage costs. You can also self-host, sidestepping monthly fees entirely.

3. Does R1 Make Inference Faster?

Yes, in many scenarios. Only a fraction of R1’s parameters activate per token, so it’s effectively a 37B-parameter model at runtime. This can be twice as fast (or more) as dense models with 70B+ parameters, depending on hardware and optimizations.

The process of picking experts is relatively quick compared to the huge cost of running all parameters in a dense model. So, any additional overhead from routing queries to the right experts is minimal compared to the savings from not running the entire network.

Additional features like quantization or distillation can further boost speed, making R1 suitable even for higher-throughput applications. In practice, R1 can outpace models like LLaMA 70B or Mistral 123B, especially when well-optimized.

4. R1-0 vs. R1

If you’ve heard about R1, you might also come across something called R1-0, and wondered how is it different from the R1? R1-0 (or R1-Zero) was DeepSeek’s initial experiment, testing if an AI could learn complex, step-by-step reasoning purely through reinforcement learning—no supervised data. It surprised everyone by developing strong logic on its own, even pausing longer for harder problems, but the outputs were messy—mixing languages, rambling, and lacking clarity.

To fix this, DeepSeek refined R1-0 into R1:

Supervised Fine-Tuning: They fed it curated examples of high-quality solutions, teaching it to present answers more neatly.

Broader Training: A wider data mix (writing, dialogues, math checks) gave it versatility beyond puzzle-solving.

Final RL Pass: Reinforcement Learning from Human Feedback (RLHF) ensured tone, style, and factual accuracy.

Result: R1 retains R1-0’s deep reasoning but adds polished, well-structured outputs. It can explain its logic using internal <thinking> tags, stick to one language, and handle a range of tasks. Essentially, R1 is R1-0 2.0—the production-ready version that combines raw reasoning power with user-friendly presentation. Both models share the same architecture, showing that reasoning skills can be taught via RL and even distilled into smaller models later.

A deeper dive (for those interested)

Both R1-Zero and R1 share the same large Mixture-of-Experts architecture (hundreds of billions of parameters) but differ in training strategy. R1-Zero relied on reinforcement learning alone from the start, while R1 added a supervised fine-tuning phase (it fed the model explicit examples of correct outputs, ensuring it learns to produce structured, accurate answers) plus RLHF to polish the model’s responses for real-world use. This two-stage approach proved that reasoning ability isn’t tied to any specific architecture: given enough scale and a suitable reward signal, a model can learn to reason step-by-step—even without supervised data at first.

DeepSeek’s distillation work also shows you can transfer these RL-trained reasoning skills into smaller, dense models that don’t use MoE at all. So the logic routines acquired by R1-Zero (and refined in R1) aren’t exclusive to one architecture or parameter count; they can be “taught” to lighter models with far fewer parameters. This opens the door to R1-like reasoning in scenarios where large MoE infrastructure may be impractical, without losing too much of the step-by-step intelligence.

5. How R1 Compares to Other Models

GPT-4 / “o1” (OpenAI)

Strength: GPT-4 leads in many language tasks and has wide domain coverage.

R1’s Edge: Matches or exceeds GPT-4 on math, logic, and puzzle benchmarks. Costs much less.

Trade-Off: GPT-4 may have a broader training corpus with exclusive data. Still, R1’s open nature and chain-of-thought RL make it highly appealing for reason-centric use cases.

LLaMA 2 (70B, Meta)

Strength: LLaMA 2 is an open 70B model with good general capability.

R1’s Edge: R1 leverages 671B total parameters, but only ~37B for each token. It often outperforms LLaMA 2 in multi-step reasoning tasks.

Speed: R1’s effective size is about half of LLaMA 70B at inference, so it can be faster in practice.

Mistral 123B (Mistral AI)

Strength: 123B dense parameters, also open-source, strong general performance.

R1’s Edge: MoE approach means R1 can scale up “total” knowledge beyond 123B but still remain cost-effective. RL training often gives R1 better stepwise logic.

Trade-Off: Mistral’s single, dense block might handle some tasks well without gating overhead, but it usually pays a bigger cost per query.

Claude 2 (Anthropic)

Strength: Safe, policy-aligned chat with large context windows.

R1’s Edge: Similar or larger context window (up to ~128k tokens) and cheaper/faster for coding or math.

Trade-Off: Claude might excel in certain conversational subtleties, but R1’s chain-of-thought approach can be more reliable for logic-intense tasks.

6. When You Should Use R1

Complex Problem-Solving

Math proofs, logic puzzles, multi-step tasks where you want the system to “explain its thinking.”

R1 rarely “short-circuits” because it systematically checks each phase.

Coding & Debugging

R1 excels at walking through code line by line, spotting logical bugs, and explaining how to fix them.

Reinforcement learning strengthens its ability to parse algorithmic steps.

Analytical Writing & Explanation

Detailed breakdowns of historical events, scientific phenomena, or complex topics.

R1’s internal chain-of-thought fosters more coherent, structured narratives.

Decision Support

Multi-factor analysis for business strategy, resource allocation, or scheduling.

The model can weigh pros/cons and show how it arrived at each conclusion.

Educational Tools

R1 can tutor by showing work step by step, ideal for math or science learners.

Students benefit from the chain-of-thought logic.

Long-Context Scenarios

With up to ~128k tokens, R1 can process entire documents, codebases, or transcripts.

Summaries, Q&A, and cross-referencing data become more reliable when the model “sees” all relevant parts.

7. Data and Privacy Concerns

Training Data

14.8 Trillion Tokens: R1 was pretrained on a massive text corpus, likely including internet data, books, and code.

Open Transparency: R1’s open release allows for more scrutiny than closed models; researchers can investigate or mitigate memorized private info.

Potential Biases: Like all big LLMs, it may inherit biases from the training data. RL and alignment steps aim to reduce harmful outputs.

Usage Privacy

Self-Hosting: Keep all queries and data on your own servers or local machines—vital for regulated industries.

Hosted Services: Multiple third-party providers might log requests. Evaluate their data-handling policies.

Alignment & Safety: The base R1 is tuned to refuse malicious or private queries, but open-source means individuals can fine-tune it differently.

8. Technical Deep Dive

Floating Point Precision Optimizations

While FP16 is common in many LLMs, DeepSeek also experimented with BF16 (Brain Float) and dynamic loss scaling:

BF16: Helps reduce numerical overflows/underflows relative to FP16, especially for extremely large models.

Layer-Specific Precision: Some layers crucial for alignment or gating run at higher precision (FP32 fallback) to ensure stable training signals.

Mixed-Precision Kernels: Specialized GPU kernels keep performance high, letting R1 handle 671B parameters (sparsely) without hitting memory limits.

Mixture-of-Experts (MoE)

How It Works

Each MoE layer contains multiple “expert” feed-forward networks.

A gating mechanism chooses which experts handle a given token.

Only those experts “activate,” yielding sparse forward passes.

Why It Matters

Scaling: You can keep adding experts to pack more knowledge into the model without ballooning inference costs.

Specialization: Some experts might become very good at math, others at coding, others at everyday conversation.

Efficiency: R1 runs more like a 37B model in practice, rather than a 671B one.

Chain-of-Thought Reinforcement Learning

Multi-Stage Training

Pretraining: R1 learns general patterns from trillions of tokens.

RL Fine-Tuning: R1 attempts multi-step tasks, gets rewarded or penalized based on correctness of each step.

Supervised Polish: Additional labeled data ensures readable, structured outputs.

Alignment & Safety: Guidance to avoid disallowed content or factual errors as much as possible.

Results

R1 “thinks out loud” internally, evaluating partial solutions.

It’s less likely to produce superficial guesses for complex tasks.

Step-by-step reasoning is more transparent and consistent, improving reliability.

Resurgence of MoE?

Google’s Switch Transformer and GShard projects pioneered trillion-parameter MoE designs, but they faced difficulties with training stability and overhead. R1 refines these ideas, showing MoE can work at scale with:

Elastic Expert Utilization: If one expert is overwhelmed (e.g., for math-heavy prompts), R1 adjusts the gating thresholds to maintain stable coverage. This prevents “dead experts” and helps each expert improve at its specialty over time.

Advanced Hardware: Fast interconnects to handle parallel experts.

Chain-of-Thought RL: Consolidating knowledge from many experts into a cohesive reasoning process.

Dense Large Models vs. R1

Could LLaMA 70B or Mistral 123B do the same?

Chain-of-Thought: Yes, they can adopt RL-based reasoning training. Distilled R1 versions prove you can teach a dense model similar logic.

MoE Efficiency: Hard to retrofit. Dense models can’t suddenly “switch on” MoE without major retraining.

Scaling: At high parameter counts, R1’s sparse approach is more cost-effective than pushing all parameters every time.

Combined, these refinements keep training and inference more stable and efficient, especially at R1’s massive scale.

Conclusion

R1 signals a new era of reasoning-centric AI. It pairs an enormous (671B) knowledge capacity with MoE so you only activate ~37B parameters per query. The addition of reinforcement learning on chain-of-thought data result is an AI that doesn’t just answer but explains each step along the way—whether debugging code, solving difficult math, or analyzing large documents. And because of its sparse design and optimized floating point handling, it stays cheaper and faster than similarly powerful dense models.

By releasing R1 open-source, DeepSeek brings advanced reasoning AI from the walled gardens of closed providers into the hands of innovators everywhere. Researchers use it to push benchmark scores higher, startups adopt it to cut costs, educators employ it to show step-by-step solutions, and hobbyists tinker with local versions to see how R1 “thinks.”

With R1, the barrier to serious AI reasoning has dropped. At the same time, it underscores a broader shift: we’re moving away from shallow text generation to truly reasoned AI. R1 isn’t the end-state—there will be R2, R3, and beyond. But it’s a milestone proving that large-scale, thought-based models can be efficient, transparent, and open. If you need an AI that does more than recite facts—one that explains and verifies each step—then R1 is worth your attention.