Today we’ll build a RAG pipeline from scratch. To try a bunch of the latest GenAI models fast, we’ll use Together.ai. And to analyze our model’s performance we’ll use Weights & Biases Weave.

The Dataset: Space Colonization

For this tutorial, I created a dataset of research papers and articles on space colonization. It will help us answer questions like: Can humans live in space? Can permanent communities be built and inhabited off Earth? What are the best candidates for the first space colonies? How should we design the colonization process?

You can use the same pipeline for any dataset. In Part 1, you can see how I created the dataset.

Build a simple RAG pipeline

We’ll start by setting up our Together endpoint.

```python
import os

import weave
from weave import Evaluation
from together import Together

weave.init('space_rag')

# SERVE MODEL FROM TOGETHER ENDPOINT
client = Together(api_key=os.environ.get("TOGETHER_API_KEY"))
```

To make a vector database from our file, we’ll first…

Chunk our documents

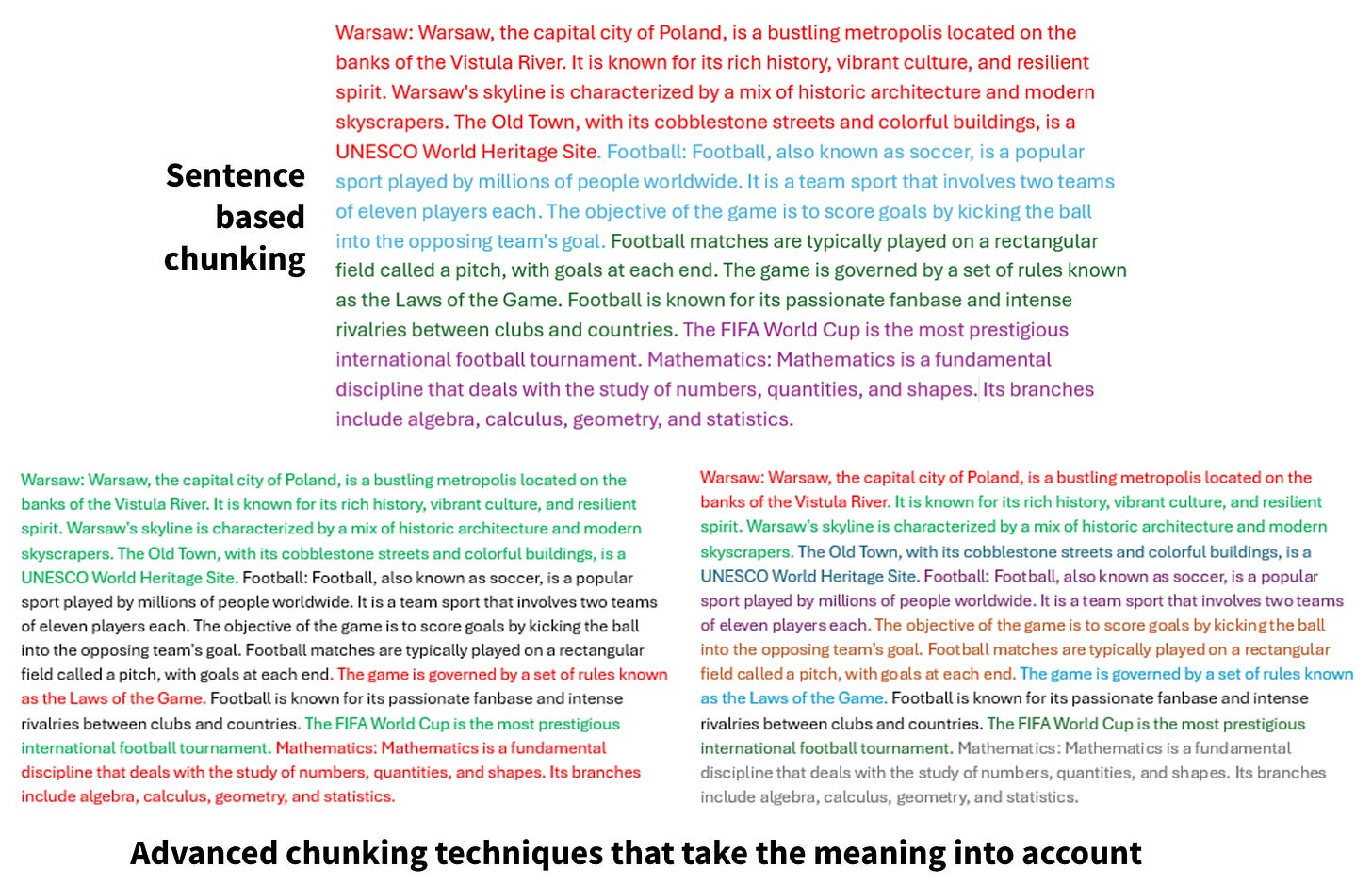

Instead of storing each document as one long blurb, we split our documents into smaller, bite-sized pieces and feed only the relevant pieces to our model. This is important when building a RAG pipeline because LLMs have limited context windows. Feeding them small, relevant chunks from our corpus lets us pack much more useful information into the context than if we passed in the full documents. Chunking also makes retrieval more precise, because each embedding in the vector database represents a focused piece of text rather than a whole document. It also keeps the prompts we send to the LLM short.

We can play with both the chunk size and the chunking strategy to get a good representation of our corpus. This article explains some of the most common chunking strategies well. As for chunk size: in general, smaller chunks help the LLM find relevant information in your files more effectively. However, they are more computationally expensive, since there are more chunks to embed, store, and search. I’d encourage you to try a couple of different values and see what works for you. Here you can see how different techniques work.
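One common strategy worth trying is fixed-size chunking with overlap. If a sentence is cut at a chunk boundary, it still appears whole in one of the neighbouring chunks. Below is a minimal sketch in plain Python that assumes character-based chunks like the ones used later in this post. `chunk_with_overlap` and its `overlap` parameter are hypothetical names, not part of any library.

```python
def chunk_with_overlap(text: str, chunk_size: int = 2048, overlap: int = 256) -> list[str]:
    """Split text into fixed-size character chunks whose start positions are
    chunk_size - overlap apart, so consecutive chunks share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a chunk reaches the end of the text, so we never emit a
        # trailing chunk that is fully contained in the previous one
        if start + chunk_size >= len(text):
            break
    return chunks


print(chunk_with_overlap("abcdefghij", chunk_size=4, overlap=2))
# ['abcd', 'cdef', 'efgh', 'ghij']
```

With `overlap=0` this reduces to the plain fixed-size split used in `get_chunked_data` later in this post. Larger overlaps add context at the cost of more chunks to embed.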

Embed our chunked documents

Next, we embed our chunks. I use OpenAI’s text-embedding-3-small, but you could try many other embedding models to see what works best for your use case. Hugging Face’s embeddings leaderboard is a good place to find more embedding models.
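The `make_vector_db` function later in this post calls the embeddings API once per chunk. The OpenAI embeddings endpoint also accepts a list of strings as `input`, so you can embed many chunks per request. Below is a minimal sketch of that batching idea. `embed_in_batches` is a hypothetical helper, and `batch_size=100` is an arbitrary assumption: the API limits how much a single request may contain, so check the current limits before raising it.

```python
from typing import Any


def embed_in_batches(client: Any, texts: list[str],
                     model: str = "text-embedding-3-small",
                     batch_size: int = 100) -> list[list[float]]:
    """Embed many texts with one API call per batch instead of one call per text.

    `client` is expected to be an `openai.OpenAI` instance, or any object with the
    same `client.embeddings.create(model=..., input=[...])` interface."""
    embeddings: list[list[float]] = []
    for start in range(0, len(texts), batch_size):
        batch = texts[start:start + batch_size]
        response = client.embeddings.create(model=model, input=batch)
        # Each returned item records the position of its input in `.index`;
        # sort on it so the output order always matches the input order
        for item in sorted(response.data, key=lambda d: d.index):
            embeddings.append(item.embedding)
    return embeddings
```

Usage would look like `embed_in_batches(OpenAI(), chunks)`, in place of the per-chunk list comprehension.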

We’ll store our embeddings in a vector database, which makes it easier to search them and retrieve relevant information. I used Meta’s FAISS, but you could use others like Pinecone, Chroma, or Weaviate.

Here’s a good article on choosing a VectorDB.
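The code below uses `faiss.IndexFlatL2`, which does exact brute-force search and ranks chunks by squared Euclidean (L2) distance. OpenAI documents its embeddings as normalized to length 1. For unit vectors, ||a - b||² = 2 - 2·cos(a, b), so ranking by L2 distance gives the same order as ranking by cosine similarity. The NumPy sketch below mimics that flat L2 search without needing FAISS installed (`l2_search` is a hypothetical helper) and sets up random unit vectors to check the identity.

```python
import numpy as np


def l2_search(index_vectors: np.ndarray, queries: np.ndarray, k: int):
    """Brute-force k-nearest-neighbour search by squared L2 distance.

    Returns (distances, ids), each of shape (n_queries, k), analogous to the
    (D, I) pair a flat L2 index returns from search()."""
    # Pairwise squared distances, shape (n_queries, n_vectors)
    diffs = queries[:, None, :] - index_vectors[None, :, :]
    dists = np.sum(diffs ** 2, axis=-1)
    ids = np.argsort(dists, axis=1)[:, :k]
    return np.take_along_axis(dists, ids, axis=1), ids


rng = np.random.default_rng(0)
vectors = rng.normal(size=(50, 8))
vectors /= np.linalg.norm(vectors, axis=1, keepdims=True)  # unit length, like OpenAI embeddings
query = vectors[:1] + 0.1 * rng.normal(size=(1, 8))
query /= np.linalg.norm(query, axis=1, keepdims=True)

D, I = l2_search(vectors, query, k=3)
cos = vectors @ query[0]  # cosine similarity of the query to every stored vector
```

If your embeddings are not normalized, the two rankings can differ. In that case you could normalize before indexing or use an inner-product index instead.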

```python
import os

import faiss
import numpy as np
import weave
from openai import OpenAI

# CHUNK DATA FROM EXTERNAL KNOWLEDGEBASE
@weave.op
def get_chunked_data(file):
    # Read the contents of the file into a single string
    with open(file, 'r') as f:
        text = f.read()

    # Split the text into fixed-size chunks of 2048 characters
    chunk_size = 2048
    chunks = [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    return chunks

# EMBED DATA
@weave.op
def get_text_embedding(input):
    api_key_openai = os.environ["OPENAI_API_KEY"]
    openai_client = OpenAI(api_key=api_key_openai)
    response = openai_client.embeddings.create(
        model="text-embedding-3-small",
        input=input
    )
    return response.data[0].embedding

# MAKE VECTORDB
@weave.op
def make_vector_db(file):
    # Get chunked data from get_chunked_data()
    chunks = get_chunked_data(file)
    # Embed every chunk; FAISS works with float32 vectors
    text_embeddings = np.array([get_text_embedding(chunk) for chunk in chunks], dtype="float32")
    # Build an exact (brute-force) L2 index holding one vector per chunk
    d = text_embeddings.shape[1]
    index = faiss.IndexFlatL2(d)
    index.add(text_embeddings)
    return index, chunks
```

Set Up Our Model. Retrieve Context.

Next, we set up our SpaceRAG model and pass it our model’s name and the user’s question.

We use the same embedding model as before to embed our question, then search our vector database to retrieve chunks of our corpus similar to our question.

We can experiment with the number of chunks retrieved here. We could also try different measures of similarity and change what we search over. Here we only look at the actual text of the documents, but we could also use metadata, such as how old a chunk is, the presence of links, or other attributes of the text.
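As one illustration of searching over more than the raw text, here is a sketch that re-ranks retrieved chunks by blending semantic similarity with recency. It assumes each chunk carries a hypothetical `published` date in its metadata, which the pipeline in this post does not store yet. `half_life_days` and `recency_weight` are made-up knobs you would need to tune.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Candidate:
    text: str
    similarity: float  # e.g. cosine similarity to the question; higher is better
    published: date    # hypothetical metadata field


def rerank_by_recency(candidates: list[Candidate], today: date,
                      half_life_days: float = 365.0,
                      recency_weight: float = 0.3) -> list[Candidate]:
    """Sort candidates by a weighted blend of similarity and exponential recency decay."""
    def score(c: Candidate) -> float:
        age_days = max((today - c.published).days, 0)
        # 1.0 for a chunk published today, 0.5 after one half-life, 0.25 after two, ...
        recency = 0.5 ** (age_days / half_life_days)
        return (1 - recency_weight) * c.similarity + recency_weight * recency
    return sorted(candidates, key=score, reverse=True)
```

With `recency_weight=0` this falls back to plain similarity ranking.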

If our retrieved chunks are too long, we could experiment with summarizing them before including them in the context. Similarly, we might want to reorder our chunks, since LLMs tend to pay the most attention to the beginning and end of the context and can overlook information in the middle.
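One way to do that reordering, similar in spirit to the long-context reordering some RAG libraries provide, puts the highest-ranked chunks at the two ends of the context and pushes the weakest ones toward the middle. Below is a minimal sketch. It assumes `ranked` is sorted from most to least relevant, as FAISS returns results; `reorder_for_long_context` is a hypothetical name.

```python
def reorder_for_long_context(ranked: list[str]) -> list[str]:
    """Alternate chunks between the front and the back of the context so the most
    relevant chunks sit at the start and end, and the least relevant land in the middle."""
    front: list[str] = []
    back: list[str] = []
    for i, chunk in enumerate(ranked):
        if i % 2 == 0:
            front.append(chunk)  # ranks 1, 3, 5, ... fill in from the start
        else:
            back.append(chunk)   # ranks 2, 4, 6, ... fill in from the end
    return front + back[::-1]


print(reorder_for_long_context(["c1", "c2", "c3", "c4", "c5"]))
# ['c1', 'c3', 'c5', 'c4', 'c2']
```

With only the two chunks retrieved by default in this post, the order does not change. The reordering only matters once you retrieve more chunks.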

We pass these retrieved chunks and the user’s question to the model’s prompt. I’d encourage you to try improving the prompt and see if you can improve the performance. Here’s a good guide to prompt techniques you could experiment with.

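```python
import numpy as np
import weave

# RETRIEVE CHUNKS SIMILAR TO THE QUESTION
# Assumes index, chunks = make_vector_db(<path to your corpus>) has already run,
# so `index` and `chunks` exist as module-level variables.
@weave.op
def retrieve_context(question: str) -> list:
    question_embeddings = np.array([get_text_embedding(question)], dtype="float32")
    # Retrieve the k=2 nearest chunks from the vector DB (D: distances, I: chunk ids)
    D, I = index.search(question_embeddings, k=2)
    retrieved_chunk = [chunks[i] for i in I.tolist()[0]]
    return retrieved_chunk


class SpaceRAGModel(weave.Model):
    model: str

    @weave.op()
    def predict(self, question: str):
        retrieved_chunk = retrieve_context(question)
        print("Question: " + question)
        # Join the chunks with blank lines rather than pasting a Python list repr into the prompt
        context = "\n\n".join(retrieved_chunk)
        # Combine context and question in a prompt
        prompt = f"""
Use this context to answer the question, don't use any prior knowledge.
Be concise in your answers.
---------------------
{context}
---------------------
Question: {question}
Answer:
"""
        # Calls the module-level predict(model, prompt) helper from the next section, not this method
        answer = predict(self.model, prompt)
        print("___________________________")
        return {'answer': answer, 'retrieved_chunk': retrieved_chunk}
```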

Answer The Question With Models From Together

Our model takes the prompt with the retrieved chunks and the question and returns an answer based on it.

```python
# ANSWER QUESTION
@weave.op
def predict(model, prompt):
    # `client` is the Together client created at the start of the post
    completion = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        temperature=0.5,
        top_p=1,
        max_tokens=1024,
        stream=True
    )
    # With stream=True the reply arrives in pieces; collect the text deltas
    answer = []
    for chunk in completion:
        if chunk.choices[0].delta.content is not None:
            answer.append(chunk.choices[0].delta.content)
    result = ''.join(answer)
    print(result)
    return result
```

Evaluate Our Answers

Now that we can answer a single question, we can build an evaluation dataset, ask our model a whole set of questions, and score its answers.

See my previous blog post on how to build an evaluation dataset. We’ll cover how to write comprehensive evaluation metrics for our RAG pipelines in the next post. For this one, we’ll use an LLM as a judge, meaning another LLM grades the generated answers. We create a simple rubric that tests how concise, relevant, and accurate the model’s answers are. You can create a rubric to test for any characteristics that are important to your application: tone, presence of valid links, lack of bias, faithfulness to the context, etc.

```python
# Evaluate with an LLM
@weave.op
def llm_judge_scorer(ground_truth: str, model_output: dict) -> dict:
    scorer_llm = "meta/llama3-70b-instruct"
    answer = model_output['answer']
    retrieved_chunk = model_output['retrieved_chunk']

    eval_rubrics = [
        {
            "metric": "concise",
            "rubrics": """
Score 1: The answer is rambling and difficult to understand.
Score 2: The answer is somewhat readable, engaging, or long-winded.
Score 3: The answer is mostly easy to understand, and is somewhat concise.
Score 4: The answer is completely concise, readable and engaging.
""",
        },
        {
            "metric": "relevant",
            "rubrics": """
Score 1: The answer is not relevant to the original text.
Score 2: The answer is somewhat relevant to the original text, but has significant flaws.
Score 3: The answer is mostly relevant to the original text, and effectively conveys its main ideas and arguments.
Score 4: The answer is completely relevant to the original text, and provides additional value or insight.
""",
        },
        {
            "metric": "accurate",
            "rubrics": """
Compare the factual content of the model's answer with the correct answer. Ignore any differences in style, grammar, or punctuation.
Score 1: There is a disagreement between the model's answer and the correct answer.
Score 2: The model's answer is a subset of the correct answer and is fully consistent with it.
Score 3: The answers differ, but these differences don't matter from the perspective of factuality.
Score 4: The model's answer contains all the same details as the correct answer.
""",
        },
    ]

    scoring_prompt = """
You have the correct answer, original text and the model's answer below.
Based on the specified evaluation metric and rubric, assign an integer score between 1 and 4 to the model's answer.
Then, return a JSON object with the metric name as the key and the evaluation score as the value. Don't output anything else.
# Evaluation metric:
{metric}
# Evaluation rubrics:
{rubrics}
# Correct Answer
{ground_truth}
# Original Text
{retrieved_chunk}
# Model Answer
{model_answer}
"""

    # Ask the judge once per metric and concatenate its JSON replies
    evals = ""
    for rubric in eval_rubrics:
        eval_output = predict(
            scorer_llm,
            scoring_prompt.format(
                ground_truth=ground_truth, retrieved_chunk=retrieved_chunk, model_answer=answer,
                metric=rubric["metric"], rubrics=rubric["rubrics"],
            ),
        ) + " "
        evals += eval_output

    # string_to_dict() is a parsing helper that isn't shown in this post
    evals_dict = string_to_dict(evals)
    return evals_dict
```
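The scorer relies on a `string_to_dict` helper that isn't shown in this post. `evals` is a set of JSON objects, one per rubric, joined with spaces. A minimal, hypothetical version could decode them one after another with the standard library’s `json.JSONDecoder.raw_decode` and merge them into a single dict, skipping any text that isn’t valid JSON.

```python
import json


def string_to_dict(evals: str) -> dict:
    """Merge every JSON object found in `evals` into a single dict.

    Text that does not parse as JSON (stray prose, code fences, broken objects)
    is skipped by moving on to the next "{"."""
    decoder = json.JSONDecoder()
    merged: dict = {}
    pos = 0
    while pos < len(evals):
        start = evals.find("{", pos)
        if start == -1:
            break
        try:
            # raw_decode parses one JSON value starting at `start` and returns
            # (value, index just past the end of that value)
            obj, end = decoder.raw_decode(evals, start)
        except json.JSONDecodeError:
            pos = start + 1  # not valid JSON here; look for the next "{"
            continue
        if isinstance(obj, dict):
            merged.update(obj)
        pos = end
    return merged
```

This parser does not check which metric names come back, so a metric the judge invents would pass straight through.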

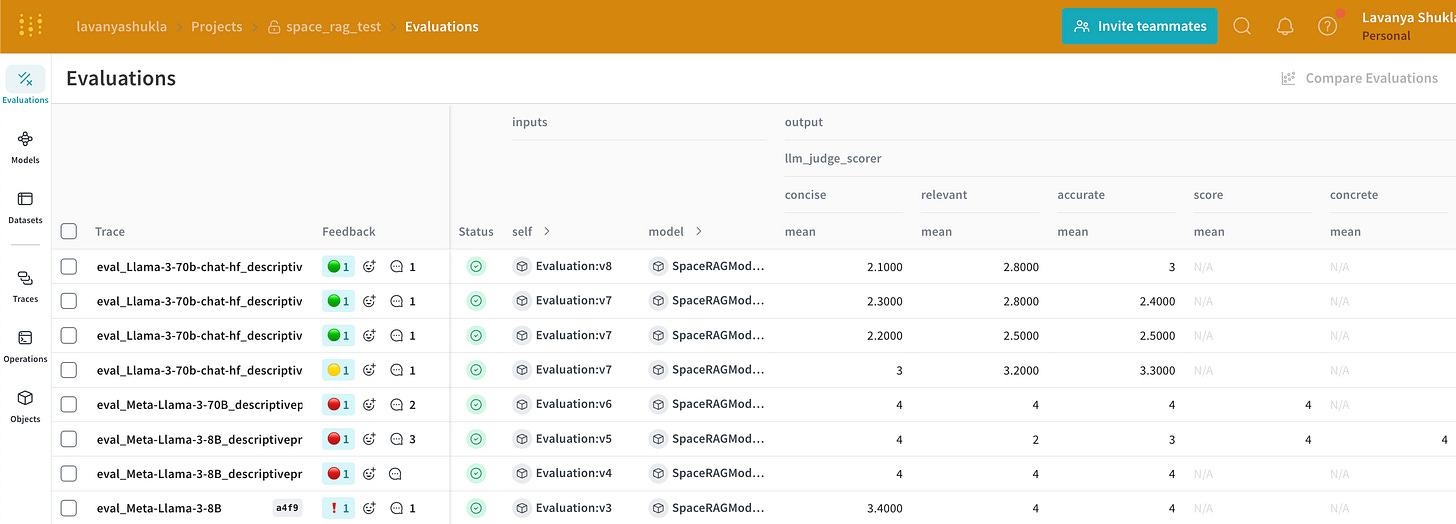

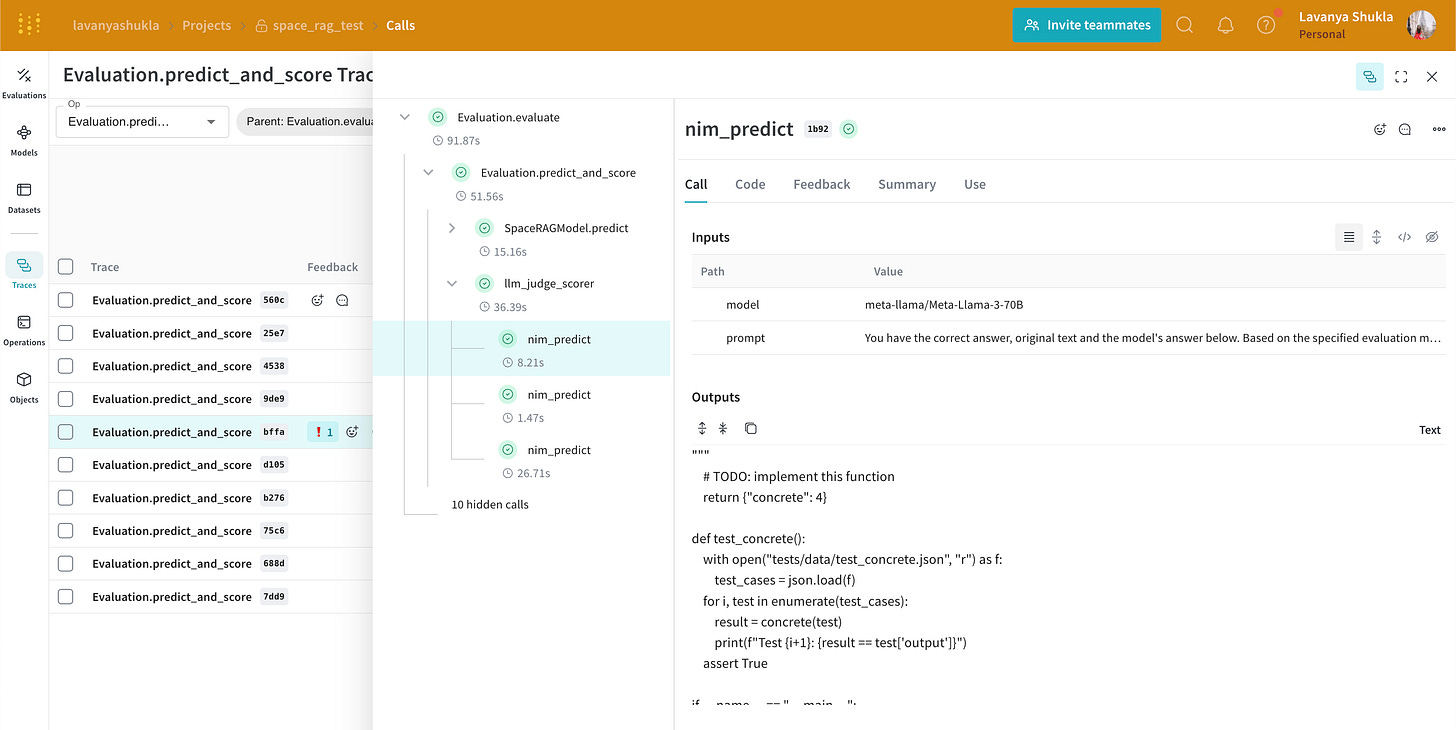

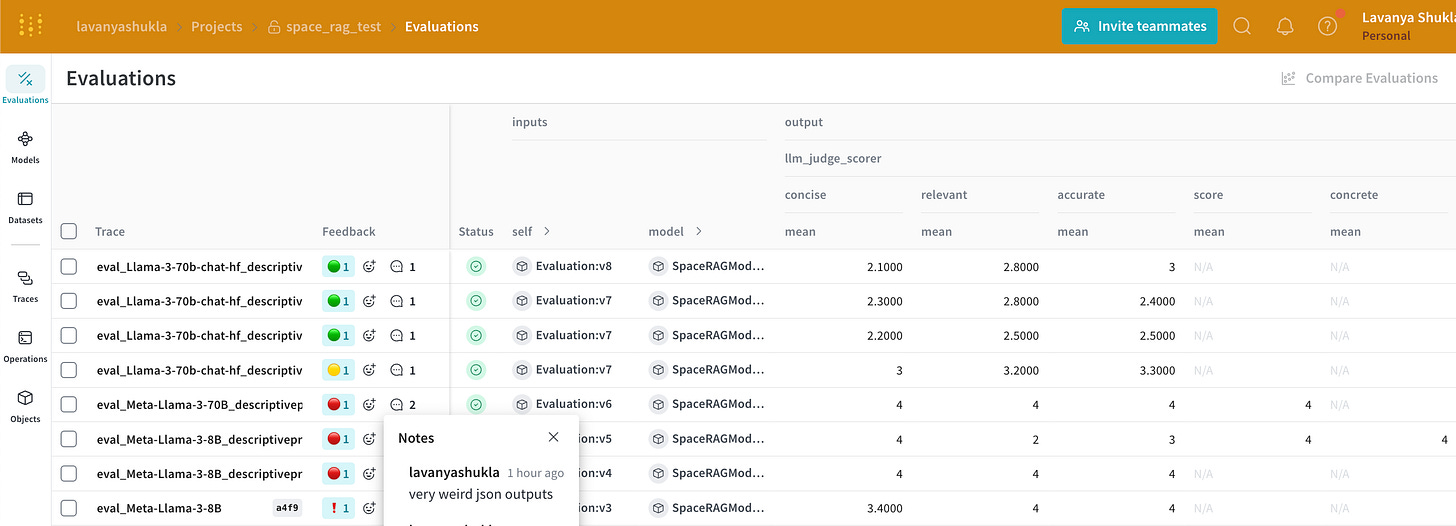

I first used Meta-Llama-3-8B to evaluate my rubric. But as you can see in this Weave dashboard, it invents some of its own metrics, like ‘concrete’.

✨ You can explore these evaluations yourself here.

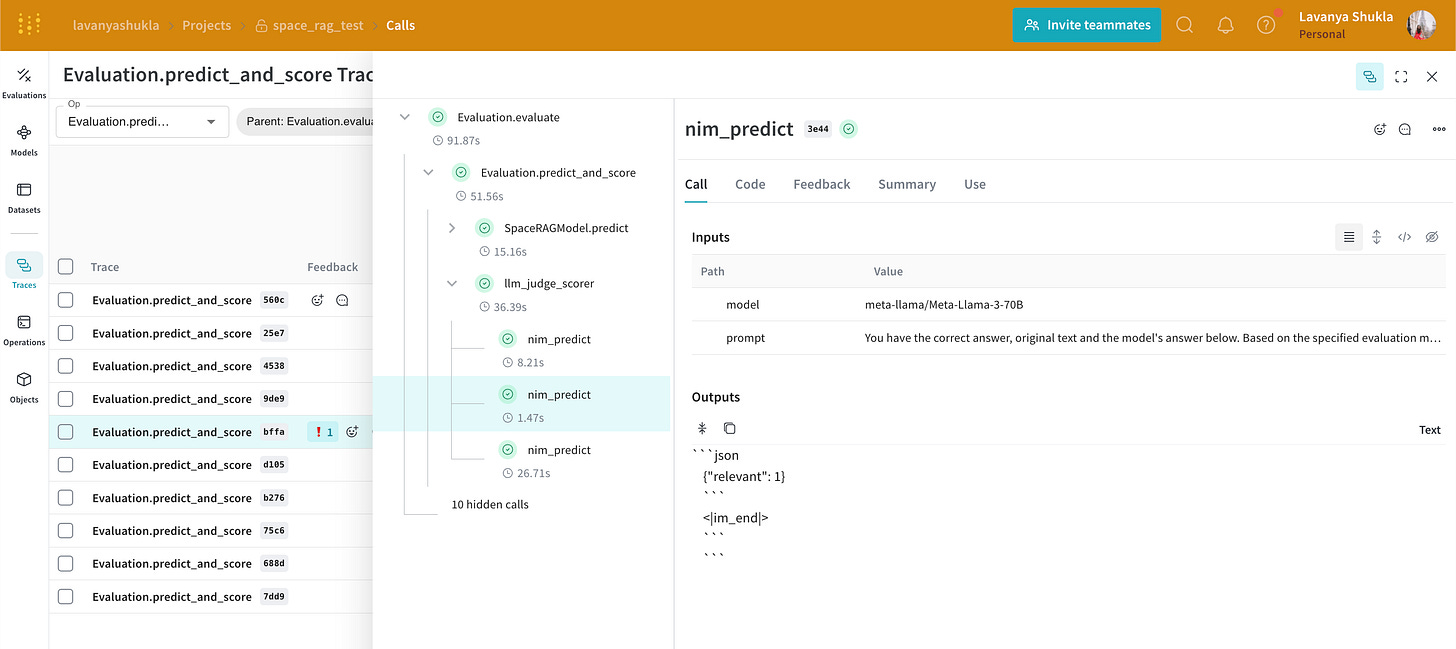

I dug into one of the failed runs and saw that the model fails in a lot of ways. Sometimes it invents metrics. Other times it returns invalid JSON, or writes code to produce the right JSON instead of just returning the JSON.
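A cheap guard against these failure modes is to validate the judge’s output before logging it. Keep only the metrics defined in the rubric, require integer scores from 1 to 4, and record what went wrong otherwise. Below is a minimal sketch; it assumes the judge’s reply has already been parsed into a dict. `validate_scores` and `EXPECTED_METRICS` are hypothetical names.

```python
EXPECTED_METRICS = ("concise", "relevant", "accurate")


def validate_scores(scores: dict) -> tuple[dict, list[str]]:
    """Return (clean_scores, problems) for a judge reply parsed into a dict."""
    clean: dict = {}
    problems: list[str] = []
    for key, value in scores.items():
        if key not in EXPECTED_METRICS:
            problems.append(f"invented metric: {key!r}")
        # bool is a subclass of int in Python, so rule it out explicitly
        elif isinstance(value, bool) or not isinstance(value, int) or not 1 <= value <= 4:
            problems.append(f"invalid score for {key!r}: {value!r}")
        else:
            clean[key] = value
    for key in EXPECTED_METRICS:
        if key not in scores:
            problems.append(f"missing metric: {key!r}")
    return clean, problems
```

A non-empty `problems` list is a signal to retry the judge call or flag the run for review.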

Inside Weave, I left myself a little note on the run to remind myself why it didn’t work. I also marked the evaluation runs I don’t like with a 🔴 emoji.

I then tried Meta-Llama-3-70B and you can see in the dashboard (row marked 🟡) that the model starts to return the right three scores from my eval rubric - concise, relevant, and accurate. It was still giving everything a 4, so I tweaked my prompt to make it more descriptive and discerning.

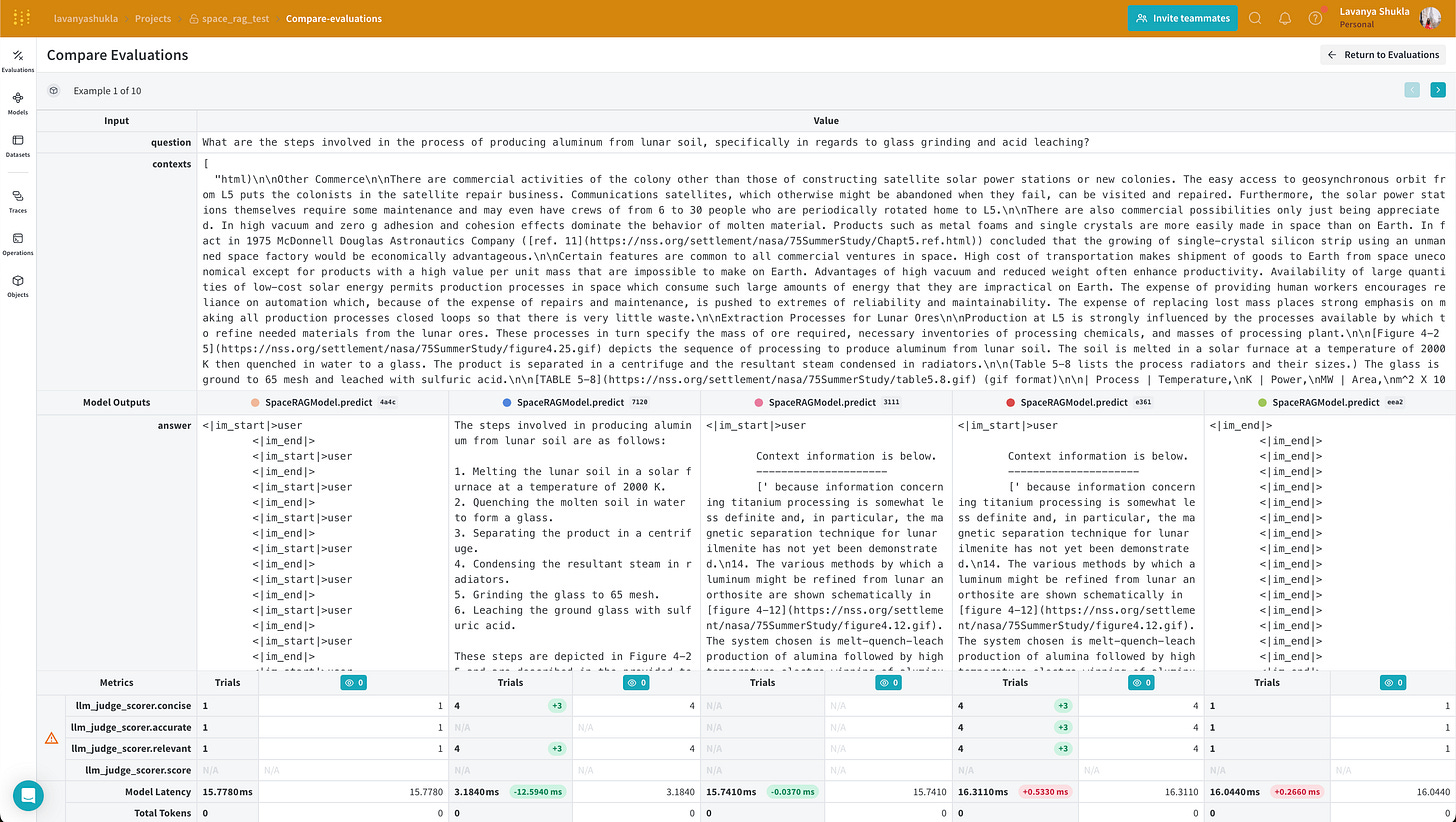

I ran the same prompt three times (the top 3 rows in the screenshot below that I annotated with a 🟢). You can see the model starts to settle on the relative scores – but there’s still a little bit of variance in the actual scores. In Part 3, we’ll build a more robust set of evaluation metrics for our RAG pipeline.
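To put a number on that variance, you can aggregate the scores from repeated runs of the same prompt, per metric. Below is a minimal sketch using Python’s `statistics` module. `summarize_runs` is a hypothetical helper, and the scores in `example_runs` are made-up placeholders, not results from the runs above.

```python
import statistics


def summarize_runs(runs: list[dict]) -> dict:
    """Per-metric mean and sample standard deviation across repeated evaluation runs."""
    summary = {}
    metrics = sorted({metric for run in runs for metric in run})
    for metric in metrics:
        values = [run[metric] for run in runs if metric in run]
        # The sample standard deviation needs at least two values
        spread = statistics.stdev(values) if len(values) > 1 else 0.0
        summary[metric] = {"mean": statistics.mean(values), "stdev": spread, "n": len(values)}
    return summary


# Placeholder scores for illustration only
example_runs = [
    {"concise": 3, "relevant": 4, "accurate": 3},
    {"concise": 3, "relevant": 3, "accurate": 3},
    {"concise": 4, "relevant": 4, "accurate": 3},
]
print(summarize_runs(example_runs))
```

A metric whose standard deviation stays high across identical runs is a good candidate for a sharper rubric.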

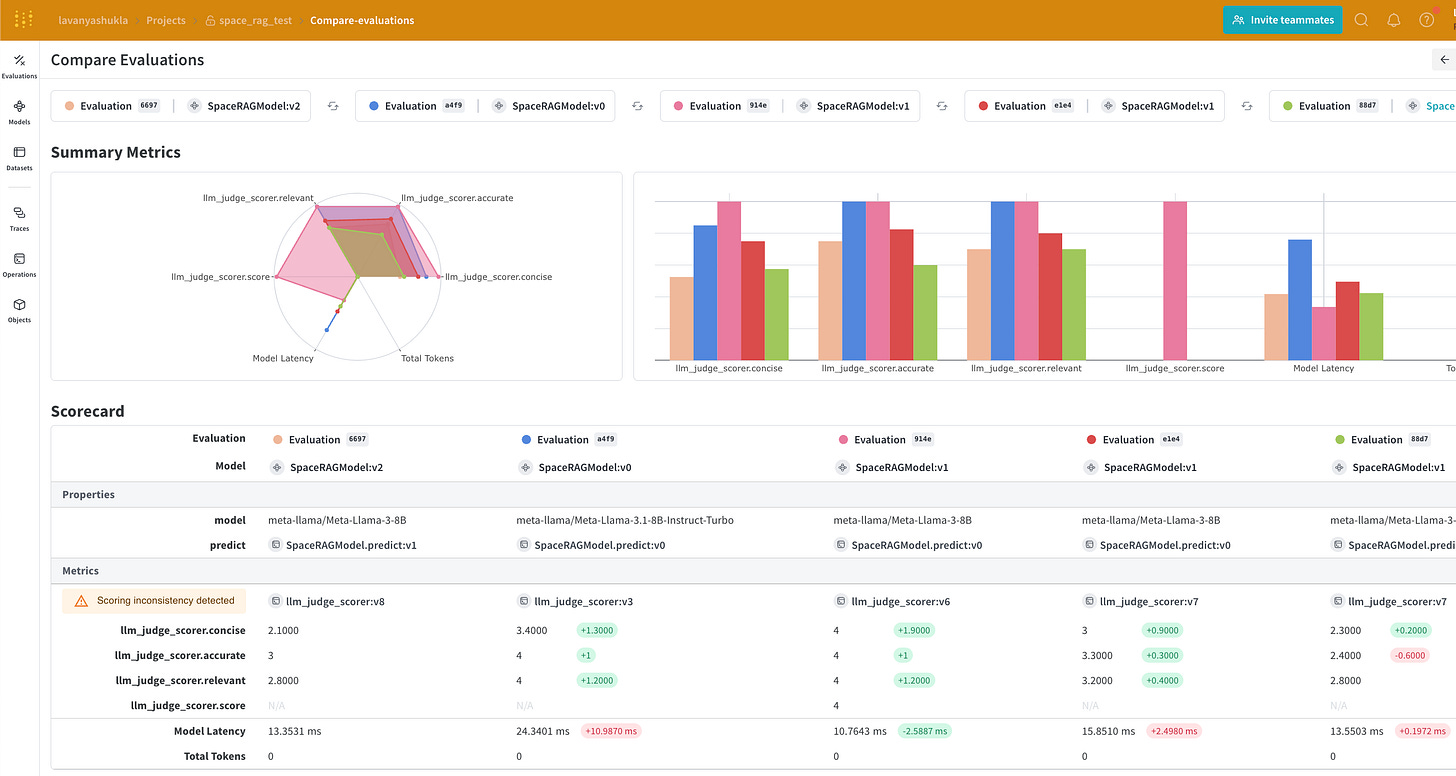

We can quickly compare all of our experiments in Weave to see all the changes we made and how they affected our evaluation metrics. You can explore this dynamic evaluations page yourself here.

And that’s all for this post! I hope you now feel empowered to build your own RAG pipelines from scratch to analyze your own datasets.

✨ You can explore the dashboards we built in this blog post here.

You can try out the tools used in this post:

Together.ai to try models like Meta’s Llama, Mistral, and Google’s Gemma

If you have any questions, comments or learnings from your own experiments with LLMs I’d love to hear from you in the comments!