AI agents have significant potential to enhance productivity, efficiency, and decision-making, provided you have the right solution to deploy them to production confidently and securely. Quality, governance, security, and cost are key concerns. Using agent ops to evaluate, monitor, and iterate on your AI agents helps you move faster toward your full business potential.

This blog post explores how AI agents differ from other AI systems, examines common challenges in building and deploying AI agents, and demonstrates how to overcome them.

✨ If you need help building robust GenAI applications (including agents), check out our GenAI Accelerator program: accelerator.cc.

What are AI agents?

AI agents are autonomous systems that can understand, plan, and execute tasks based on user instructions. They act on behalf of a user: processing data, making decisions, and executing tasks without human oversight. Agents can range from basic single-turn assistants to complex multi-agent systems capable of reasoning, collaboration, and self-optimization.

Key components of an AI agent

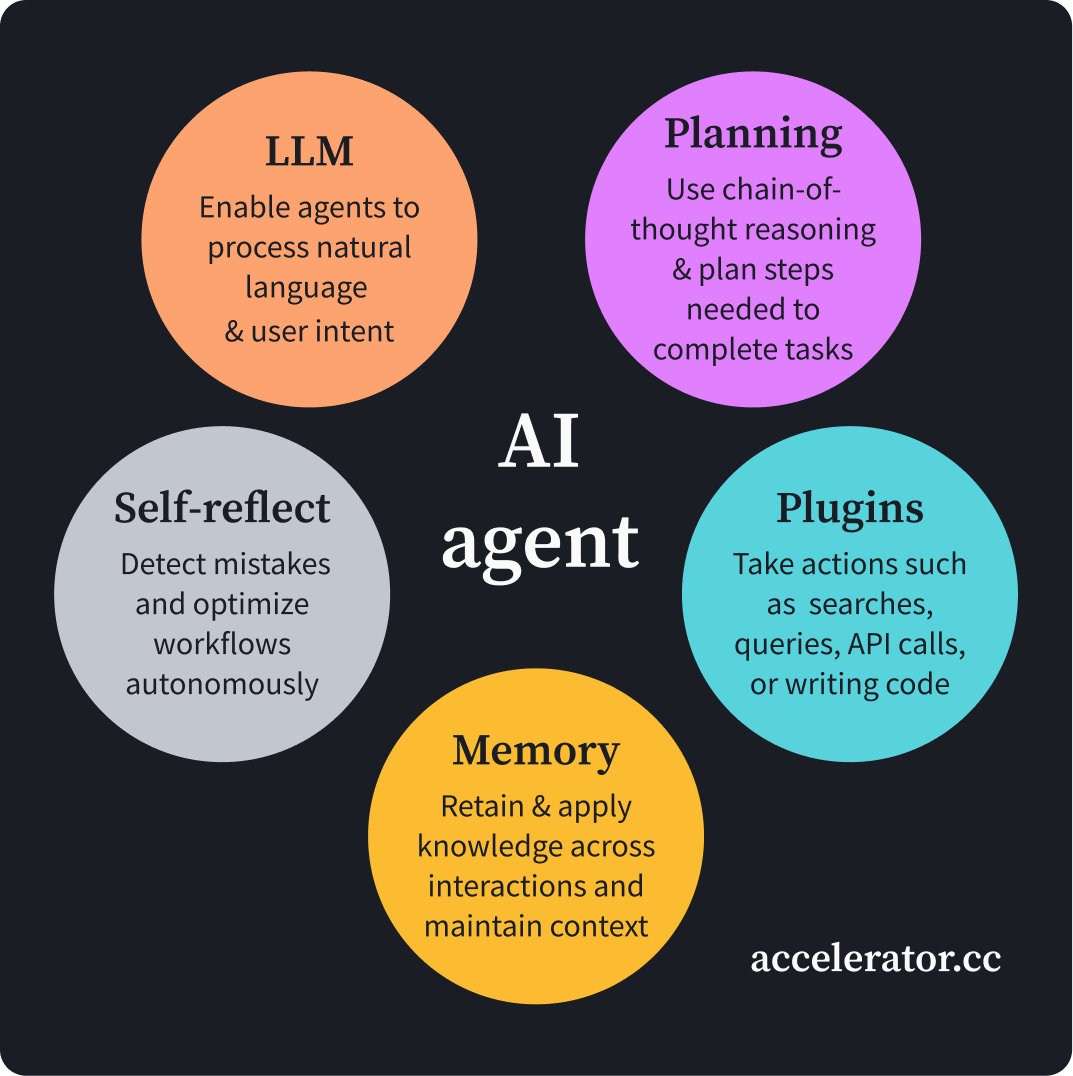

LLM (large language model): To power the agent's ability to process natural language and understand user intent.

Planning: To use chain-of-thought reasoning and plan the steps needed to accomplish a specific task.

Tools and plugins: To enable the agent to take actions such as running web searches and database queries, calling external tools and APIs, or even writing and executing code.

Memory: To ensure the agent can retain and apply knowledge across interactions and maintain context.

Self-reflection and critiquing: To enable agents to detect mistakes and optimize their workflows autonomously.
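To make these components concrete, here is a minimal Python sketch of a single-agent loop. It is an illustration under stated assumptions, not any framework's API. `fake_llm` is a hypothetical stand-in for a real model call that replies either `TOOL <name> <input>` or `FINAL <answer>`. The `calculator` tool and the `Agent` class are also hypothetical. The loop shows three of the components: planning (asking the model what to do next), tool use, and memory (observations appended to a list). It also adds a step limit as a basic safeguard. Self-reflection is omitted for brevity.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tool registry: tool name -> function taking a string input.
TOOLS: dict[str, Callable[[str], str]] = {
    # Adds integers written as "2+3".
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}


def fake_llm(task: str, memory: list[str]) -> str:
    """Stand-in for an LLM call (assumption). Requests the calculator once,
    then answers with the most recent observation."""
    observations = [m for m in memory if m.startswith("observation:")]
    if not observations:
        return "TOOL calculator 2+3"
    return "FINAL " + observations[-1].split(":", 1)[1].strip()


@dataclass
class Agent:
    llm: Callable[[str, list[str]], str]
    tools: dict[str, Callable[[str], str]]
    memory: list[str] = field(default_factory=list)
    max_steps: int = 5  # guard against endless plan/act cycles

    def run(self, task: str) -> str:
        self.memory.append(f"task: {task}")
        for _ in range(self.max_steps):
            decision = self.llm(task, self.memory)       # planning
            kind, _, rest = decision.partition(" ")
            if kind == "FINAL":
                return rest
            name, _, tool_input = rest.partition(" ")
            result = self.tools[name](tool_input)         # tool use
            self.memory.append(f"observation: {result}")  # memory
        raise RuntimeError("step limit reached without a final answer")


agent = Agent(llm=fake_llm, tools=TOOLS)
print(agent.run("What is 2 + 3?"))  # prints 5
```

In a real agent, `fake_llm` would be replaced by a model call whose output is parsed into the same two kinds of decisions.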

AI agents are different from generative and other types of AI because they are autonomous, interactive, and goal-oriented. While generative AI excels at creating text, images, and music based on learned patterns, AI agents go a step further by actively interacting with their environment to achieve specific objectives. And unlike traditional AI systems that may perform templated tasks, AI agents possess the ability to make decisions, adapt to changing conditions, and learn from their experiences in real time. They can even incorporate elements of perception, reasoning, and action, enabling them to navigate complex scenarios, collaborate with humans, and manage workflows dynamically.

This autonomy and adaptability make AI agents particularly suited for operational roles, such as automating business processes, managing resources, and optimizing performance, setting them apart from other AI paradigms that focus primarily on generating tokens or executing pre-defined tasks.

Challenges of building AI agents

While AI agents bring enormous potential, deploying them in real-world enterprise environments comes with a range of technical and operational challenges. Some of the key issues businesses face include:

Quality: Ensuring the accuracy, robustness, and reliability of AI agents is challenging due to the non-deterministic nature of LLMs, which agents use for functions such as planning, memory, tool use, human interaction, and self-reflection. This complexity necessitates extensive testing with large datasets, a task for which traditional development tools are ill-equipped, as they lack capabilities such as scoring and aggregation. Furthermore, iterating with techniques such as retrieval-augmented generation (RAG) and fine-tuning means agents must be re-evaluated after every change, which further complicates the evaluation process.
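The same input can yield different outputs, so evaluation usually means running each example several times and aggregating the scores. The sketch below shows that scoring-and-aggregation pattern, not any particular tool's implementation. It assumes a hypothetical `evaluate` helper and two illustrative scorers. It also uses a stub agent that cycles through canned answers to mimic non-determinism.

```python
import itertools
from statistics import mean


def exact_match(output: str, expected: str) -> float:
    """1.0 if the output equals the expected answer (case-insensitive)."""
    return float(output.strip().lower() == expected.strip().lower())


def contains_expected(output: str, expected: str) -> float:
    """1.0 if the expected answer appears anywhere in the output."""
    return float(expected.strip().lower() in output.lower())


def evaluate(agent_fn, dataset, scorers, trials=3):
    """Run every example `trials` times and average each scorer over all runs."""
    scores = {scorer.__name__: [] for scorer in scorers}
    for row in dataset:
        for _ in range(trials):
            output = agent_fn(row["question"])
            for scorer in scorers:
                scores[scorer.__name__].append(scorer(output, row["expected"]))
    return {name: mean(values) for name, values in scores.items()}


# Stub agent that cycles through canned answers to mimic non-determinism.
_answers = itertools.cycle(["Paris", "paris, France", "Lyon"])


def flaky_agent(question: str) -> str:
    return next(_answers)


dataset = [{"question": "What is the capital of France?", "expected": "Paris"}]
result = evaluate(flaky_agent, dataset, [exact_match, contains_expected])
print(result)  # exact_match about 0.33, contains_expected about 0.67
```

A single run would have reported either a perfect score or zero. The repeated runs expose the variance that a one-shot test hides.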

Latency and response times: AI agents, which rely on LLMs and external APIs, may experience long response times, especially under heavy loads. Slow performance degrades user experience and can hinder time-sensitive business decisions, making latency management crucial.

Cost: Operating AI agents at scale, particularly those using LLMs, can become expensive if not properly optimized. Every API call, token generation, and inference adds to operational costs, and mismanagement of resources can lead to significant cost overruns. Efficiently allocating computational resources across multiple agents and managing their concurrent operations is critical to maintaining costs at reasonable levels.
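Cost accrues per call, and an agent typically makes several calls per task, often with a growing context. The back-of-the-envelope estimate below rests on several assumptions. The prices are hypothetical ($3 per million input tokens and $15 per million output tokens), and the token counts and traffic volume are made up. Substitute your provider's actual rates and numbers from your own traces.

```python
# Hypothetical prices in USD per million tokens; use your provider's real rates.
PRICE_PER_MILLION = {"input": 3.00, "output": 15.00}


def call_cost(input_tokens: int, output_tokens: int, prices=PRICE_PER_MILLION) -> float:
    """Cost of one LLM call in USD."""
    return (input_tokens * prices["input"] + output_tokens * prices["output"]) / 1_000_000


# Made-up (input, output) token counts for one agent run with three LLM calls.
# Input grows each step because the accumulated context is re-sent.
run_calls = [(2_000, 300), (3_500, 200), (4_200, 500)]

per_run = sum(call_cost(i, o) for i, o in run_calls)
monthly = per_run * 10_000 * 30  # assumed 10,000 runs per day for 30 days

print(f"${per_run:.4f} per run, ${monthly:,.2f} per month")
# prints $0.0441 per run, $13,230.00 per month
```

The growing input counts show why memory and context management matter for cost as well as quality.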

Production monitoring: Due to the stochastic nature of LLMs, there is always a risk of inaccurate responses and actions, as well as performance issues that weren’t caught during testing. Once in production, AI agents require ongoing monitoring to detect and address failures such as infinite loops, context overflows, or performance bottlenecks. Without proper tools, maintaining large-scale deployments can become an operational burden.
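Two of these failure modes, infinite loops and context overflows, can be caught with simple runtime checks. The sketch below is a hypothetical `AgentMonitor` class. It flags an identical tool call once it has been repeated `max_repeats` times. It also warns when an approximate token count exceeds a limit. The whitespace-based token count is a crude assumption; a real deployment would use the model's own tokenizer.

```python
from collections import Counter


class AgentMonitor:
    """Hypothetical runtime checks an agent loop could call after each step."""

    def __init__(self, max_repeats: int = 3, context_limit_tokens: int = 8_000):
        self.max_repeats = max_repeats
        self.context_limit_tokens = context_limit_tokens
        self.action_counts = Counter()
        self.alerts = []

    def record_action(self, tool: str, tool_input: str) -> None:
        """Alert once when the same tool call is repeated max_repeats times."""
        key = (tool, tool_input)
        self.action_counts[key] += 1
        if self.action_counts[key] == self.max_repeats:
            self.alerts.append(
                f"possible infinite loop: {tool}({tool_input!r}) called {self.max_repeats} times"
            )

    def check_context(self, messages: list) -> None:
        """Alert when the approximate context size exceeds the limit."""
        # Crude estimate: whitespace-separated words; use a real tokenizer in practice.
        approx_tokens = sum(len(m.split()) for m in messages)
        if approx_tokens > self.context_limit_tokens:
            self.alerts.append(
                f"context overflow risk: ~{approx_tokens} tokens > {self.context_limit_tokens}"
            )


monitor = AgentMonitor(max_repeats=3, context_limit_tokens=50)
for _ in range(4):  # the agent keeps issuing the same search
    monitor.record_action("search", "latest invoice")
monitor.check_context(["word " * 60])
for alert in monitor.alerts:
    print(alert)
```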

Agent accountability and decision transparency: As AI agents take on more complex, autonomous tasks, businesses need to understand the reasoning behind their decisions. Lack of transparency can reduce trust in the system, especially in regulated industries where accountability is crucial.

Security and data privacy: AI agents can be vulnerable to attacks like prompt injections or unauthorized data access. This is particularly critical in industries like healthcare and finance, where compliance with data protection regulations (e.g., GDPR, HIPAA) is mandatory.
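One common mitigation for unauthorized data access, including access triggered by a prompt injection, is to enforce least-privilege tool permissions in code outside the model. That way, no model output can grant access on its own. Here is a minimal sketch; the role-to-tool `POLICY`, the tool functions, and `guarded_call` are all hypothetical.

```python
class PermissionDenied(Exception):
    """Raised when an agent requests a tool outside its allowlist."""


# Hypothetical least-privilege policy: agent role -> tools it may call.
POLICY = {
    "support_agent": {"search_kb", "create_ticket"},
    "billing_agent": {"search_kb", "read_invoice"},
}

# Hypothetical tool implementations.
TOOLS = {
    "search_kb": lambda query: f"kb results for {query!r}",
    "create_ticket": lambda summary: f"ticket created: {summary}",
    "read_invoice": lambda invoice_id: f"invoice {invoice_id}: $120.00",
}


def guarded_call(role: str, tool_name: str, *args):
    """Check the policy before running a tool the model asked for.

    The decision is made in code, so a prompt-injected instruction cannot
    widen an agent's permissions."""
    if tool_name not in POLICY.get(role, set()):
        raise PermissionDenied(f"{role} is not allowed to call {tool_name}")
    return TOOLS[tool_name](*args)


print(guarded_call("support_agent", "search_kb", "refund policy"))
try:
    # e.g. the model was tricked into requesting billing data
    guarded_call("support_agent", "read_invoice", "INV-42")
except PermissionDenied as err:
    print("blocked:", err)
```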

Governance and regulatory compliance: As AI agents become more autonomous, ensuring they operate within guardrails and legal frameworks is essential. Failure to comply with regulations can result in fines, legal actions, or damage to the company’s reputation, especially when sensitive data is involved.

Multi-agent debugging: In systems with multiple interacting AI agents, coordination becomes a challenge, leading to delays and inefficiencies. Maintaining and updating multiple agents while ensuring compatibility and consistent performance across versions is complex. AI agents may also produce inconsistent or unexpected results, especially when dealing with novel scenarios or ambiguous inputs.

W&B Weave: An agent ops solution

Agent ops is an emerging discipline to streamline the development, monitoring, and optimization of AI agents within an organization. By offering specialized tools and a centralized system of record, agent ops enables organizations to efficiently iterate, collaborate, deploy, and manage AI agents, ensuring they perform their intended functions with accuracy and reliability.

W&B Weave is a comprehensive agent ops platform designed for AI developers to evaluate, monitor, and refine AI agents confidently. It offers a lightweight, developer-friendly toolkit to streamline the AI development process. With W&B Weave, you can conduct rigorous evaluations, stay current with new LLMs, and monitor agents in production, all while collaborating securely. Built to meet the demands of non-deterministic LLM-powered agents, W&B Weave is framework- and LLM-agnostic. It requires no additional code to work with popular LLM providers such as OpenAI, Anthropic, Cohere, and MistralAI, and with agentic AI frameworks such as LangChain, LlamaIndex, and DSPy.

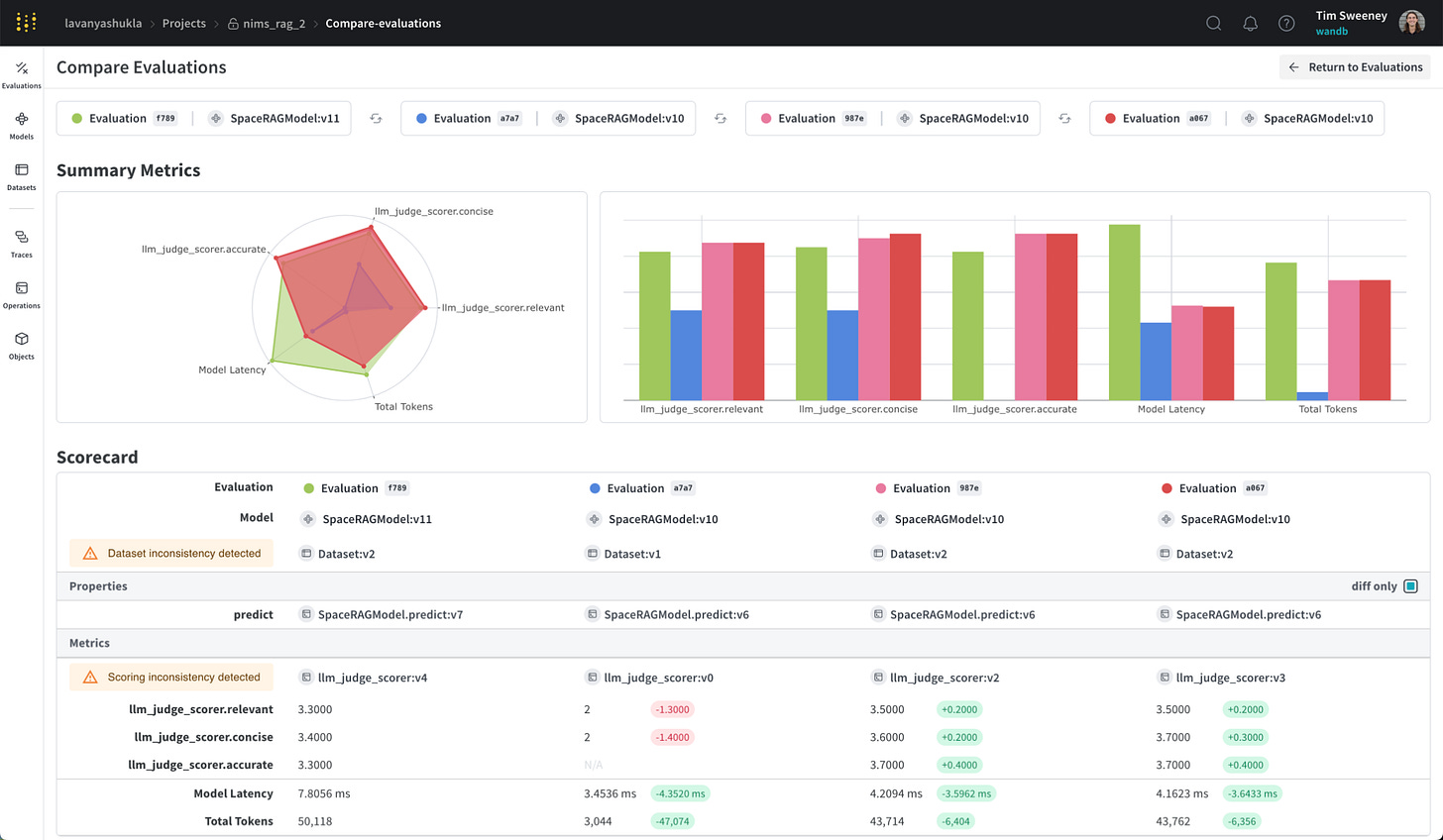

Evaluate quality throughout the development cycle: W&B Weave offers a powerful, visual comparison tool. You can compare different recipes side by side, including fine-tuning, RAG, LLMs, and datasets, for accuracy, latency, and token usage. Summary metrics are shown in radar and bar plots, and a scorecard compares your baseline against a challenger. You can combine Weave metrics with human feedback. Developers can also bring existing scoring functions or write their own to gauge accuracy, relevancy, robustness, toxicity, and more.
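At its core, a baseline-versus-challenger comparison computes per-metric deltas and applies a rule for which direction counts as better. The sketch below is a hypothetical `scorecard` function and is not how Weave computes its scorecard. The numbers are made up for illustration and are not benchmark results.

```python
# Made-up summary metrics for illustration only (not benchmark results).
baseline = {"accuracy": 0.78, "latency_s": 2.4, "tokens": 1850}
challenger = {"accuracy": 0.84, "latency_s": 3.1, "tokens": 2600}  # e.g. a RAG variant

LOWER_IS_BETTER = {"latency_s", "tokens"}


def scorecard(baseline: dict, challenger: dict) -> dict:
    """Per-metric (baseline, challenger, delta, verdict) for challenger vs baseline."""
    rows = {}
    for metric, b in baseline.items():
        c = challenger[metric]
        if c == b:
            verdict = "same"
        elif (c < b) == (metric in LOWER_IS_BETTER):
            verdict = "improved"
        else:
            verdict = "regressed"
        rows[metric] = (b, c, c - b, verdict)
    return rows


for metric, (b, c, delta, verdict) in scorecard(baseline, challenger).items():
    print(f"{metric:<10} {b:>8} {c:>8} {delta:>+9.2f}  {verdict}")
```

With these illustrative numbers, the challenger gains accuracy but costs more latency and tokens. Surfacing that kind of trade-off is the purpose of a side-by-side view.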

Latency & performance optimization: W&B Weave offers real-time performance metrics that track the latency of LLM calls, API requests, and agent actions.

Cost management & optimization: W&B Weave tracks real-time LLM token consumption, API calls, and other expenses both at the granular level as well as at the aggregate agent level. It provides insights into inefficient agent actions, allowing teams to refine agent designs for cost-effectiveness and closely monitor budgets.

Production monitoring: W&B Weave captures data in real time and scores responses live, so you can spot unusual cases and respond quickly. Continuous monitoring of agent performance against established benchmarks helps detect model drift. Custom evaluation metrics help teams decide when to retrain or adjust models to maintain long-term accuracy and reliability.

Agent transparency and decision auditing: With W&B Weave, you get chain-of-thought traces for every LLM transaction. The trace trees show how decisions are made and how data flows through your AI agent. You can use them to debug, uncover the root cause of problems, and identify specific failure modes.
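In Weave itself, tracing is enabled by calling `weave.init` and decorating functions with `weave.op`; see the Weave documentation for details. The plain-Python sketch below only illustrates how a trace tree can be assembled from nested function calls. It is not Weave's implementation. `traced`, `retrieve`, `generate`, and `agent` are hypothetical names, and the retriever and LLM are stubs.

```python
import functools
import time

_active = []  # stack of calls currently executing
roots = []    # top-level trace nodes


def traced(fn):
    """Record each call as a node; calls made inside it become its children."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        node = {"name": fn.__name__, "inputs": args, "children": []}
        (_active[-1]["children"] if _active else roots).append(node)
        _active.append(node)
        start = time.perf_counter()
        try:
            node["output"] = fn(*args, **kwargs)
            return node["output"]
        except Exception as exc:
            node["error"] = repr(exc)  # failures stay visible in the tree
            raise
        finally:
            node["latency_ms"] = (time.perf_counter() - start) * 1000
            _active.pop()
    return wrapper


@traced
def retrieve(query):
    return ["refund policy doc"]  # stand-in for a real retriever


@traced
def generate(query, docs):
    return f"Answer to {query!r} based on {len(docs)} document(s)"  # stand-in for an LLM


@traced
def agent(query):
    return generate(query, retrieve(query))


def show(node, depth=0):
    print("  " * depth + f"{node['name']}  {node['latency_ms']:.3f} ms")
    for child in node["children"]:
        show(child, depth + 1)


agent("How do refunds work?")
show(roots[0])
```

Each node records inputs, output or error, and latency. Walking the tree therefore shows where time went and which step produced a bad result.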

Security & data privacy: W&B Weave enables secure collaboration between developers and domain experts with a single source of truth for agents.

Governance and regulatory compliance: W&B Weave generates audit logs that you can use for compliance reports to meet strict industry standards, crucial for sectors like healthcare and finance.

Real-time failure detection & debugging: You can monitor agents for failures (e.g., infinite loops or memory leaks) in real time. This helps you identify and resolve issues quickly and improve reliability.

“I love Weave for a number of reasons, and it all goes back to trust.” Mike Maloney, Co-founder and CDO, Neuralift AI

Best practices for implementing agent ops

Successfully integrating agent ops into an enterprise’s AI infrastructure requires careful planning and adherence to best practices. By following these guidelines, organizations can ensure that their AI agents are deployed and managed efficiently, with minimal disruption and maximum value.

Start small and scale gradually: Begin by implementing agent ops on a small subset of your AI agents or in a controlled environment. This approach allows you to familiarize yourself with the tools and processes before scaling up to your entire agent ecosystem.

Integrate early in the development cycle: Incorporate agent ops tools and practices from the early stages of agent development. This proactive approach helps identify and address potential issues before they become critical in production environments.

Establish clear metrics and KPIs: Define specific, measurable key performance indicators (KPIs) for your AI agents. These may include response time, accuracy, cost efficiency, and user satisfaction. Use agent ops tools to track these metrics consistently.
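As an example of turning KPIs into something checkable, the sketch below computes four metrics from per-request records: p95 latency, accuracy, cost per request, and a satisfaction rate. It then compares each one with a target. The records, field names, and thresholds are all hypothetical; in practice they would come from your own logs, traces, and user feedback.

```python
from statistics import quantiles

# Hypothetical per-request records, e.g. exported from traces plus user feedback.
records = [
    {"latency_s": 1.2, "correct": True,  "cost_usd": 0.010, "thumbs_up": True},
    {"latency_s": 0.8, "correct": True,  "cost_usd": 0.012, "thumbs_up": True},
    {"latency_s": 4.0, "correct": False, "cost_usd": 0.030, "thumbs_up": False},
    {"latency_s": 1.5, "correct": True,  "cost_usd": 0.009, "thumbs_up": True},
    {"latency_s": 2.0, "correct": True,  "cost_usd": 0.015, "thumbs_up": False},
]

# Hypothetical targets: ("max", x) means value must be <= x, ("min", x) means >= x.
TARGETS = {
    "p95_latency_s": ("max", 3.0),
    "accuracy": ("min", 0.85),
    "cost_per_request_usd": ("max", 0.02),
    "satisfaction": ("min", 0.70),
}


def compute_kpis(records):
    n = len(records)
    latencies = [r["latency_s"] for r in records]
    return {
        # n=20 yields 19 cut points; the last one is the 95th percentile.
        "p95_latency_s": quantiles(latencies, n=20, method="inclusive")[-1],
        "accuracy": sum(r["correct"] for r in records) / n,
        "cost_per_request_usd": sum(r["cost_usd"] for r in records) / n,
        "satisfaction": sum(r["thumbs_up"] for r in records) / n,
    }


def missed_targets(kpis, targets):
    """Names of KPIs that fall outside their target."""
    missed = []
    for name, (kind, limit) in targets.items():
        value = kpis[name]
        ok = value <= limit if kind == "max" else value >= limit
        if not ok:
            missed.append(name)
    return missed


kpis = compute_kpis(records)
print(kpis)
print("missed:", missed_targets(kpis, TARGETS))
```

Percentile latency is used instead of the mean because a few slow agent runs can hurt users while barely moving the average.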

Foster cross-functional collaboration: Encourage collaboration between AI developers, operations teams, and business stakeholders. This ensures that agent ops insights are translated into meaningful improvements across the organization.

Invest in team training: Ensure that your team is well-versed in agent ops tools and best practices. Provide ongoing training to keep them updated on the latest developments in the field.

Develop a feedback loop: Establish a system for collecting and acting on feedback from end-users of your AI agents. Use this information in conjunction with agent ops data to drive user-centric improvements.

By adhering to these best practices, organizations can effectively leverage agent ops. This proactive approach to agent management will ultimately lead to more robust and successful AI implementations across the enterprise.

Keep pace with AI agent innovation

As AI agents become integral to enterprise operations, agent ops will be a critical discipline for ensuring their successful deployment and management. By addressing key challenges in quality, governance, security, and costs, agent ops empowers companies to harness the full potential of AI agents.
